DoltLab 101: Services Overview and 2022 Roadmap

Earlier this year we launched our latest product, DoltLab, a self-hosted DoltHub for users who don't want to push their data to the public internet.

Since DoltLab's launch, we've been working to make deploying and operating DoltLab smooth and easy, while also tackling the challenges we've encountered in revamping our DoltHub code base to fully support both products.

The DoltLab and DoltHub source code is not yet open, but in the meantime, I wanted to give DoltLab users, and prospective users, a quick overview of the DoltLab services so they can build a more solid mental model of how the services that make up DoltLab work together.

This blog will not cover how to run or operate DoltLab. We've already described that in detail here, with some required setup tweaks for v0.2.0 described here, and an Ubuntu bootstrap script linked here that makes getting started with DoltLab a cinch.

Instead, in this blog I'll briefly discuss each service included in DoltLab and I'll also reveal our tentative DoltLab roadmap! Let's dive in 🐬!

TL;DR

DoltLab Services Overview

- PostgreSQL Server

- DoltLab Remote API Server

- DoltLab Remote Data Server

- DoltLab API Server

- DoltLab GraphQL API Server

- DoltLab UI Server

- DoltLab File Service API Server

- DoltLab Envoy Proxy Server

DoltLab Roadmap

DoltLab Services Overview

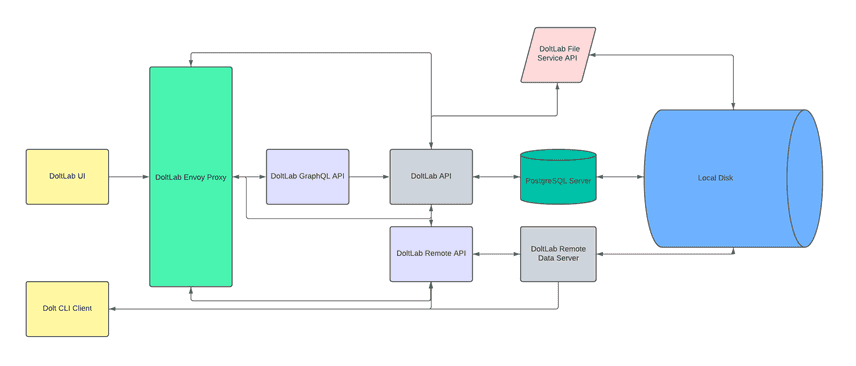

We currently publish DoltLab as a zip folder that contains a docker-compose.yaml used to run DoltLab's services. Docker Compose orchestrates the eight services that make up DoltLab, and uses a host's local disk whenever a service needs to persist data. Here's an illustration of what this looks like:

This illustration shows all eight services and a simplified representation of their communication pathways, represented by the arrows between the services. Let's briefly look at each service, it's function, and how it interacts with other services within DoltLab.

PostgreSQL Server

DoltLab ships with a PostgreSQL (PG) server image that contains the schema required to run DoltLab. DoltLab's API uses this PG server to store users, database information, pull requests, credentials, and just about everything else DoltLab needs to work.

When a new user creates an account on DoltLab, the frontend calls an RPC endpoint on DoltLab's API, which writes the new user's information to the PG database. The DoltLab API is the only service able to communicate with the PG server.

This works the same way it does in a lot of applications, so there's nothing particularly fancy going on here. The main difference is that many applications run their database server on a separate host from the main application. For now, DoltLab runs all of its services on a single host, and its PG server persists data to a Docker volume backed by local disk.
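To make the account-creation flow concrete, here's a minimal Go sketch in which the API server is the only component holding a handle to the user store. `User`, `UserStore`, `CreateUserRequest`, `APIServer`, and the in-memory `memStore` are all hypothetical: the real DoltLab API is a gRPC service backed by PostgreSQL, and its actual RPC names and schema aren't public yet.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// User is a hypothetical, pared-down account record.
type User struct {
	ID       int
	Username string
	Email    string
}

// UserStore abstracts the PG database. In this sketch only APIServer holds
// one, mirroring how no other DoltLab service talks to PG directly.
type UserStore interface {
	InsertUser(username, email string) (User, error)
}

// memStore is an in-memory stand-in for a PG-backed store.
type memStore struct {
	mu     sync.Mutex
	nextID int
	byName map[string]User
}

func newMemStore() *memStore {
	return &memStore{nextID: 1, byName: make(map[string]User)}
}

// InsertUser plays the role of an INSERT into a users table.
func (s *memStore) InsertUser(username, email string) (User, error) {
	s.mu.Lock()
	defer s.mu.Unlock()
	if _, taken := s.byName[username]; taken {
		return User{}, fmt.Errorf("username %q is taken", username)
	}
	u := User{ID: s.nextID, Username: username, Email: email}
	s.nextID++
	s.byName[username] = u
	return u, nil
}

// CreateUserRequest is what the frontend would send to the API's RPC.
type CreateUserRequest struct {
	Username string
	Email    string
}

// APIServer is the sole owner of the store.
type APIServer struct {
	store UserStore
}

// CreateUser validates the request, then persists the user via the store.
func (a *APIServer) CreateUser(req CreateUserRequest) (User, error) {
	if req.Username == "" || req.Email == "" {
		return User{}, errors.New("username and email are required")
	}
	return a.store.InsertUser(req.Username, req.Email)
}
```

In this sketch, swapping `memStore` for a PostgreSQL-backed implementation (for example, one built on `database/sql`) would leave `APIServer` untouched, which is one benefit of routing every write through a single service.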

When a user creates and pushes data to a new database on DoltLab, though, only the database's metadata and a unique identification number are stored in the PG database, not the actual DoltLab database data.

Instead, DoltLab database data is stored in a separate location on disk, managed by two different services called the DoltLab Remote API and the DoltLab Remote Data Server.

DoltLab Remote API Server and DoltLab Remote Data Server

The DoltLab Remote API server is an API used for interacting with Dolt remote data, accessible by both the DoltLab API and the Dolt CLI client through DoltLab's Envoy Proxy, which we will touch on a bit later.

When Dolt data is pushed, pulled, or cloned from a DoltLab remote, the requests hit DoltLab's Remote API. The Remote API then locates the database data specified in the request, authenticates the request, and uses the DoltLab Remote Data Server to serve the data from Dolt table-files stored on the host's local file system.
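The steps above can be sketched in Go as: locate the database, check the caller's access, then hand back plain HTTP URLs pointing at the Remote Data Server. The real Remote API speaks gRPC; the request type, the in-memory lookup maps, the token-based check, and the `/<id>/<file>` URL path are hypothetical, and only port 100 comes from DoltLab's current setup.

```go
package main

import (
	"errors"
	"fmt"
)

// tableFileRequest is a hypothetical request for specific table-files.
type tableFileRequest struct {
	Token    string   // caller's credential
	Owner    string   // database owner
	Database string   // database name
	FileIDs  []string // table-files the client wants to download
}

// remoteAPI uses in-memory maps where the real service would query PG.
type remoteAPI struct {
	dataHost string                    // host running the Remote Data Server
	dbIDs    map[string]int64          // "owner/name" -> database ID
	acl      map[int64]map[string]bool // database ID -> tokens allowed to read
}

var (
	errNotFound  = errors.New("database not found")
	errForbidden = errors.New("permission denied")
)

// downloadURLs locates the database, checks the caller's access, and returns
// one HTTP GET URL per table-file on the Remote Data Server (port 100).
func (r *remoteAPI) downloadURLs(req tableFileRequest) ([]string, error) {
	id, ok := r.dbIDs[req.Owner+"/"+req.Database]
	if !ok {
		return nil, errNotFound
	}
	if !r.acl[id][req.Token] {
		return nil, errForbidden
	}
	urls := make([]string, 0, len(req.FileIDs))
	for _, f := range req.FileIDs {
		urls = append(urls, fmt.Sprintf("http://%s:100/%d/%s", r.dataHost, id, f))
	}
	return urls, nil
}
```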

The Remote Data Server is a simple, local file server that serves Dolt table-files to clients over HTTP. The Remote API is the service that actually manages how these files are persisted, how their data is accessed, who has permission to access them, and which backing store holds them.

Interestingly, DoltHub uses the same Remote API as DoltLab. However, it doesn't need a separate Remote Data Server to serve Dolt table-files from local disk, since DoltHub's Remote API serves these files directly from S3.

In the current implementation of DoltLab, the Remote API listens for connections on port 50051 and the Remote Data Server listens on port 100. Both ports need to be open on the host running DoltLab. Dolt clients connect to the Remote API on port 50051 for clones, pushes, and pulls. The clients also currently expect to receive HTTP URLs they can GET table-files from, and they download those table-files over port 100, directly from the Remote Data Server.

DoltLab API Server

The next major service in DoltLab is its main API, simply called the DoltLab API. This API implements the core functionality required for DoltLab (and DoltHub) to work. It's a gRPC service that supports all of the functionality you see on DoltLab, including database creation, searching, pull request merging, and online data editing.

The DoltLab API interacts with the Remote API to get, post, and list DoltLab database changes and is used primarily by DoltLab's GraphQL service which makes requests to DoltLab's API via DoltLab's Envoy Proxy.

Generally, we implement DoltHub and DoltLab functionality as CRUD-style RPCs that write data to and retrieve data from the PG server, with the exception of the database data itself.

Again, nothing too fancy is going on with DoltLab's main API. There is some complexity in how it handles asynchronous operations, like large merges or large file uploads. On DoltHub, for instance, we run separate instances of the DoltHub API that process large or long-running asynchronous workloads out-of-band, so that user-facing DoltHub API instances serve only short-lived, lightweight requests. But that's a blog for a different day.

DoltLab GraphQL API Server and DoltLab UI Server

DoltLab's GraphQL server is the data-layer API that provides a uniform interface for DoltLab UI requests and responses that are ultimately headed to DoltLab's API. In front of GraphQL sits DoltLab's UI server, a Next.js server using React, which communicates with GraphQL via DoltLab's Envoy Proxy Server.

The DoltLab UI running in a user's browser interacts with the GraphQL API through the /graphql endpoint on DoltLab's Envoy Proxy Server, served on port 80. Both GraphQL and DoltLab's UI are the same servers we use for DoltHub, configured slightly differently to produce the differences in UI and functionality between the two products.

It was important to us to be able to reuse a lot of our DoltHub code when creating DoltLab, and so far we've succeeded in supporting both products with minimal changes to our frontend code and architecture.

We have written extensively on DoltLab's (DoltHub's) frontend, which includes both GraphQL and the UI server, so I won't spend too much time rehashing that information here. If you want more information about these services though, I encourage you to peruse the following blogs that go into much more detail about them:

- How We Built DoltHub: Front-End Architecture

- Why we switched from gRPC to GraphQL

- Delivering Declarative Data to DoltHub with GraphQL

- Using Apollo Client to Manage GraphQL Data in our Next.js Application

DoltLab File Service API Server

A new service created specifically for DoltLab is its File Service API, which listens for connections on port 4321. This API lets DoltLab users upload files for import into their DoltLab databases by storing these files on, and serving them from, local disk.

Though it sounds similar to the Remote Data Server, this service handles local files created with edit-on-the-web or uploaded directly by individual DoltLab users. The Remote Data Server, on the other hand, serves only the Dolt table-files used by DoltLab databases. Although both services end up serving files from disk, the Remote Data Server is managed by the Remote API in a principled way so that it serves only database data.

Though DoltHub also supports user uploads and edit-on-the-web, it doesn't currently use the new File Service API, since DoltHub interfaces directly with S3 to serve user uploads and edit-on-the-web content. DoltLab, on the other hand, needed a disk-backed implementation so that DoltLab administrators wouldn't have to supply cloud resources to run it, so we created the File Service API. Once DoltLab starts supporting cloud resources, this API can easily be extended to use either a user-supplied cloud backing store or local disk.

DoltLab Envoy Proxy Server

We've mentioned DoltLab's Envoy Proxy Server multiple times already. It facilitates service-to-service communication for most of DoltLab's services and also acts as a simple edge proxy for DoltLab as a whole. To keep things relatively simple, we wanted to ship DoltLab with just a single proxy server that uses the envoy.tmpl file included in DoltLab's zip folder.

On DoltHub, every service runs with its own dedicated Envoy proxy server as a sidecar, and we run a fleet of Envoy proxies at the edge to load balance requests to DoltHub. For the current version of DoltLab, a single proxy server works well and handles a number of tasks for us, including authenticating requests, proxying gRPC calls from our Node servers to our Go servers, handling CORS, and directing requests to the appropriate service cluster.
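Envoy itself is configured declaratively (in DoltLab's case, through envoy.tmpl), so the Go below is only an analogy for the routing part of its job: match a request path against ordered prefixes and forward it to a backend cluster. Apart from `/graphql`, the prefixes and all backend addresses are hypothetical, and authentication and CORS handling are omitted.

```go
package main

import (
	"net/http"
	"net/http/httputil"
	"net/url"
	"strings"
)

// route maps a path prefix to an upstream "cluster" address.
type route struct {
	prefix  string
	backend string
}

// routes are checked in order, so more specific prefixes come first.
var routes = []route{
	{"/graphql", "http://graphql:9000"}, // GraphQL server (address assumed)
	{"/", "http://ui:3000"},             // catch-all to the UI (assumed)
}

// clusterFor returns the backend for a request path, or "" if none match.
func clusterFor(path string) string {
	for _, r := range routes {
		if strings.HasPrefix(path, r.prefix) {
			return r.backend
		}
	}
	return ""
}

// newEdgeProxy builds one reverse proxy per backend up front, then forwards
// each request to the backend chosen by clusterFor.
func newEdgeProxy() (http.Handler, error) {
	proxies := make(map[string]*httputil.ReverseProxy)
	for _, r := range routes {
		u, err := url.Parse(r.backend)
		if err != nil {
			return nil, err
		}
		proxies[r.backend] = httputil.NewSingleHostReverseProxy(u)
	}
	return http.HandlerFunc(func(w http.ResponseWriter, req *http.Request) {
		p, ok := proxies[clusterFor(req.URL.Path)]
		if !ok {
			http.Error(w, "no route", http.StatusNotFound)
			return
		}
		p.ServeHTTP(w, req)
	}), nil
}
```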

DoltLab Roadmap 2022

Now that you have a sense of DoltLab's service stack, here's what's coming down the pipe for DoltLab over the next few months. Please note that this roadmap is tentative and subject to change.

If you're looking to influence DoltLab's roadmap for your use case, the best way to do so is by creating issues or feature requests in DoltLab's issue repository, or by signing your organization up for Enterprise DoltLab Support.

| Feature | ETA |
|---|---|
| Implement internal telemetry/usage metrics | Q1 2022 |
| Disable limited query results | Q1 2022 |
| Deploy production demo instance | Q1 2022 |
| Publish DoltLab Administrator documentation | Q1 2022 |
| Simpler/easier DoltLab version upgrade migration process | Q1 2022 |
| Persistent service logging | Q1 2022 |
| Cypress tests/end-to-end tests | Q2 2022 |
| Allow use of cloud resources | Q2 2022 |
| Implement an Administrator UI | Q2 2022 |
| Provide service metrics for Administrators | Q2 2022 |
| Data Backups | Q2 2022 |
| Open Source DoltHub/DoltLab | Q2 2022 |
| Support Multi-Host Deployments | Q3 2022 |
| Service replication | Q3 2022 |
| Single sign-on for enterprise | Q3 2022 |

Conclusion

If you're using DoltLab, or want to start, please don't hesitate to contact us here or on Discord in the #doltlab channel. We're happy to help you out and make sure that DoltLab delivers great value for your use case.

Stay tuned for more DoltLab updates headed your way soon!