Update: Aaron Boodman, aka “The Guy who Invented the Term Prolly Tree”, had some thoughts about the definition I used here. I’m preserving the original post, but you should definitely read my follow-up post after this one if you want the complete story.

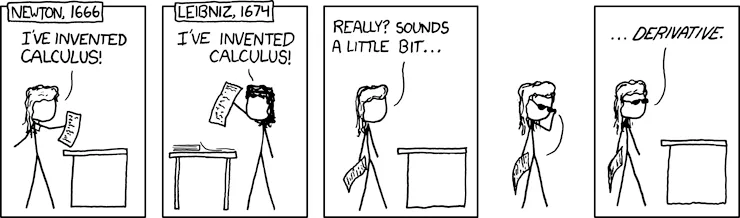

Multiple Discovery refers to when a scientific discovery is made independently by multiple individuals around the same time. The most well-known examples are Isaac Newton and Gottfried Leibniz’s independent invention of calculus, and Charles Darwin and Alfred Russel Wallace’s independent formulation of the theory of evolution.

(Source: https://xkcd.com/626/)

There’s even a hypothesis1 that multiple discovery is the norm, rather than an exception: that invention is an result of social conditions rather than any single “great thinker”, and that once the conditions for a discovery are met, that discovery is often made multiple times in rapid succession.

It’s not always immediately obvious when multiple discovery happens. There’s several reasons why a case of multiple discovery might go unnoticed:

- New inventions may bring new terminology or be used in different fields for different purposes. It may require an intimate understanding of the field to even realize that these separate inventions are actually the same thing.

- Sometimes an invention is not widely known because its creator doesn’t realize they’ve created something novel: the design seemed obvious and intuitive to them.

But if we see multiple discovery happening, it’s a strong indication that the thing being discovered is in enough demand that it’s going to keep being discovered, at least until knowledge of it eventually becomes commonplace. And it won’t stop being discovered even then: calculus was still being discovered in 1994.

This is all relevant because of how it relates to one of our favorite data structures: prolly trees.

We talk about prolly trees a lot, because they’re the novel tool that makes Dolt possible. We didn’t invent them, we have Noms to thank for that. But we did see the value in using them to build the world’s first version controlled relational database.

Except it turns out that Noms isn’t the only group to invent prolly trees. Just like calculus, prolly trees have been independently invented at least four times now, under different names and used in different contexts. And I think that’s worth a closer look.

2015: Noms Invents Prolly Trees#

In 2015, Noms coined the term “Prolly Tree” for their tree data structure with the following really nice properties:

- searchable - They can store ordered data and perform lookups based on ordered keys or offsets.

- history independence - Any data set has a unique representation, regardless of the sequence of operations that led to the current state.

- self-balancing - The tree is probabilistically balanced.

- structural sharing - Storing multiple versions of the data only requires additional storage proportional to their diff: duplicate parts of the data are deduplicated.

- efficient diffing - It’s possible to compare two prolly trees (or two snapshots of the same prolly tree) and identify only the parts of the data that are different between the two trees. The time complexity is logarithmic on the total data size, and is only linear on the size of the diff.

- efficient mutation - Applying changes to the data stored in a prolly tree produces a new prolly tree. Like with diffing, the time complexity is logarithmic on the total data size, and is only linear on the size of the diff.

These properties make prolly trees great whenever you need to version control structured data, or need to reconcile data changes that may be received out-of-order.

I genuinely think prolly trees are novel. But I also think that they’re are a textbook example of something that seems like an natural next step once you understand the prior work that it’s built on: the novelty isn’t in creating something new, but in combining existing math in a novel way. A prolly tree is ultimately nothing more than a Merkle Tree built by recursively applying a Content-Defined Chunker, such as with a rolling hash function.

None of these concepts are new:

- Content-Defined Chunking is a core part of rsync, which was first released in 1996, although the concept itself is even older.

- The first rolling hash function, the Rabin fingerprint, was proposed in 1981.

- Merkle trees were patented in 1979.

If you understand these individual concepts, it’s easy to see how they can be combined to create a tree structure that has all of these properties. It just took a little while to reach a point where prolly trees were needed.

In fact, there’s a lot of prior art of things that are almost prolly trees:

- rsync uses content-defined chunking to split large files, but stores the chunk hashes in a flat list instead of in a Merkle tree.

- BitTorrent magnet links represent the torrent data as a Merkle tree of chunks, but the chunks are fixed-size, not content-defined.

- IPFS uses content-defined chunking to split large files, and then represents these chunks as a Merkle tree, but because the content-defined chunking is not applied recursively, the inner nodes of the tree are prone to shift.

It’s not clear what the exact catalyst was that spurred the creation of true prolly trees. But Paul Frazee has a theory. Frazee is the co-creator and developer of a number of platforms and protocols that we’ve discussed on this blog, including Hypercore and BlueSky’s ATProto. He’s worked with prolly trees, although he calls them “Merkle Search Trees” for reasons that we’ll get to. He posited that a general dissatisfaction with the state of the Web circa 2014 led to renewed interest in p2p networks around that time. And p2p networks would have a lot more need for the properties the prolly trees provide.

He may be up to something with that 2014 theory, since Noms released their design and implementation a year later.

The Noms implementation is what Dolt is built upon, as we touched on in Monday’s blog post celebrating the 10th anniversary of Dolt’s codebase. While Noms may have created the design, DoltHub innovated on it, incorporating improvements such as:

- Using a cumulative distribution function to control the size of tree nodes and better balance the tree.

- Only hashing keys when storing key-value pairs, so that merely updating values can’t change the shape of the tree.

But in a textbook case of multiple discovery, Noms wouldn’t be the only ones to discover the concept, and DoltHub wouldn’t be the only ones to build applications with it.

2019: France Invents Merkle Search Trees (a.k.a Prolly Trees)#

In 2019, two researchers at the French Institute for Research in Computer Science and Automation (abbreviated as Inria) published a paper titled Merkle Search Trees: Efficient State-Based CRDTs in Open Networks. In it, they describe how to design a conflict-free replicated data type (CRDT) that represents a key-value mapping with a Merkle Tree. It has all the same properties as prolly trees, although they call their design “Merkle Search Trees” instead. The authors didn’t seem to be aware of the prior work done by Noms, perhaps because Noms never published a research paper about it.

The implementation outlined in Inria’s paper differs from other implementations in an interesting way: every other approach builds the tree recursively, applying a rolling hash function to each row, with the following steps:

- Use a rolling hash chunker on the underlying (L0) data stream to divide it into chunks.

- Concatenate the hash of each chunk to create a new (L1) data stream.

- Use a rolling hash chunker on the L1 data stream to divide it into chunks.

- Concatenate the hash of each chunk to create a new (L2) data stream.

- …and so on

The implementation described in this paper takes a slightly different approach, hashing the data only once and using the number of leading zeros in the hash of each key to determine how many levels of the tree will split off a new chunk.

This approach has some different tradeoffs: it’s harder to dynamically control chunk sizes, but it relies less on the ordering of the keys in the dataset. This order-independence actually helped inspired our design for vector indexes.

Inria’s design would get later picked up by Bluesky and used in their implementation of ATProto. By combining Merkle search trees with the IPLD data format created by Protocol Labs, ATProto defines a way to uniquely content-address, store, and request and serve large files in a scalable way.

2020: DePaul University Invents Content-Defined Merkle Trees (a.k.a Prolly Trees)#

Not long after, a group of researchers at DePaul University were exploring how to use block-based storage to compress Docker images. Existing methods stored images on a per-file level, and couldn’t deduplicate large files with minor changes between them, or the same file stored at multiple paths. Their paper, Content-defined Merkle Trees for Efficient Container Delivery, describes a way to break down large files into a tree of manageable chunks that could be stored in a content-addressed block store. Their design is nearly identical to the Noms design.

Their paper would draw the attention of XetHub, who would use it to build a Git plugin designed to replace Git LFS. XetHub would make their own improvements that are very reminiscent of the improvements that we made at DoltHub, including:

- Using multiple rolling hash functions with different seeds in order to the normalize the size of tree nodes and better balance the tree.

- Content-aware hashing for different data types, including only hashing keys when storing key-value pairs, so that merely updating values can’t change the shape of the tree.

They then published their results in a 2023 paper titled “Git is For Data”… which sounds a lot like Dolt’s tagline:

(Source: our front page)

Then in 2024 XetHub got acquired by HuggingFace, and their Git plugin is now used by HuggingFace to store and distribute large database files in their repos, similar to how DoltHub works as a database repository.

We’ve been watching XetHub closely and cheering them along on their journey: it was really cool to see how closely their development mirrored ours. The biggest difference between us and them is the use cases we target: XetHub is mainly used to manage and share data for local use, while Dolt is mainly used as a live database server (although Dolt has a local command line workflow too).

All of which is to say: hey HuggingFace, if you’re reading this, we’ve been developing your storage model longer than any other extant company. It runs in our veins. Let’s chat.

The End?#

That’s the end of the timeline. To the best of our knowledge, no one has independently invented prolly trees / Merkle search trees / content-defined Merkle trees since.

But that’s three inventions. At the start of this I said there were four. Where’s the fourth?

On Monday’s post we claimed that Noms was the world’s first implementation of a prolly tree. Well, it turns out we were wrong.

Let’s rewind the clock all the way back to 2009.

2009: Bup Invents Prolly Trees (But Doesn’t Name it Anything)#

This is, as far as I can tell, the earliest recorded use of a prolly tree. bup is a tool for storing incremental file system backups within a Git repo, originally a solo project by Avery Pennarun. Since Git has issues with large files, bup’s workaround is to split large files up into a tree of Git objects by recursively applying a rolling hash chunker. This is a textbook example of a prolly tree, and it’s been in bup’s codebase since the beginning. But bup didn’t give a name to the technique, possibly because Pennarun didn’t realize that he had created something novel. Perhaps he saw it as the natural solution to the specific problem he was facing.

And he did this in 2009, six years before Noms. I’m not aware of any other examples of prolly trees being invented in that six year period, but it wouldn’t surprise me if they were. It’s fully possible that there are even more people who have independently discovered this data structure, and are using it in their own work with little fanfare. It’s not that wild to believe that something could have been invented five times instead of just four.

Comparing each of these independent discoveries, it’s easy to see how none of them knew about each other:

- The bup implementation was created to solve a specific need and was never named or promoted, so none of the future inventors were aware of it.

- Noms was a private company and never published any research papers. Every subsequent inventor was a public research institution.

- Inria’s design was published in a symposium on distributed systems, whereas the DePaul researchers were trying to optimize containerized application storage, two fields of computer science with relatively little overlap.

It’s mostly by chance that I even became aware of each of these parallel inventions, making it more plausible that there are others. It’s something I’m on the lookout for now, and who knows where I’ll spot it next.

A Tree By Any Other Name#

Each group also chose their own name for what they discovered, or didn’t name it at all. This poses an obvious question: at some point in the future, when the use of this data structure becomes institutional knowledge, we’ll have standard terminology for it. But what name will that be?

- Prolly Tree?

- Merkle Search Tree?

- Content-Defined Merkle Tree?

- Or something else?

At DoltHub we call them prolly trees because that’s what Noms called them. And we’re going to continue calling them that because it predates every other name that we know of. And speaking as a member of the DoltHub team, I’m hoping the name sticks because that’s the one that we’ve already invested so much messaging around.

I guess we’ll find out eventually. In the meantime, we’re going to keep building cool stuff on top of it. If you like building cool stuff with novel data structures, consider joining our Discord server and chatting with us about it. Or if you think like we do that version control is important for all your data, come chat with us about how prolly trees and Dolt can help with your workflow.

Footnotes#

-

Lubowitz JH, Brand JC, Rossi MJ. Two of a Kind: Multiple Discovery AKA Simultaneous Invention is the Rule. Arthroscopy. 2018 Aug;34(8):2257-2258. doi: 10.1016/j.arthro.2018.06.027. PMID: 30077243. ↩