Agents need tests. It’s even in the Claude Code documentation.

If you frequent this blog, you’ll know Claude Code and I are bros. I don’t just trust the documentation. I trust my lived experience with the tool. Claude Code works better with tests. I even wrote about it in my popular Claude Code Gotchas article.

Dolt has a little-known testing feature called Dolt CI. The CI stands for Continuous Integration. Dolt CI allows you to write complex queries and assertions as tests. Last week, we announced we’d made Dolt CI more command-line friendly. We wanted Dolt CI to work better with agents. Agents can run Dolt CI tests as they make changes on clones or branches of your database. These tests will guide the agent to do more uninterrupted, correct work on your database, just like your unit tests do for code.

Agents need tests. Dolt is the only SQL database with a built-in test engine. Dolt is the database for agents.

Sound familiar? Agents need branches. Dolt is the only SQL database with branches. Dolt is the database for agents.

We think we’re on to something here. Bring on your agentic database use cases! Let’s explore testing and agents a bit further.

Tests Help Claude Code

I asserted that agents work better with tests and cited the Anthropic documentation and my lived experience. But why do agents work better with tests?

Before I knew about Claude Code, I wrote an article asking whether coding agents were one innovation away from awesome. All I had used was chat-based coding like in Cursor. The constant back and forth did not save me any time coding. I wasn’t using a real coding agent yet. But after I discovered Claude Code, I was far more productive. Claude Code could work for minutes without needing my input, unlocking an asynchronous workflow. More importantly, the code it generated was good.

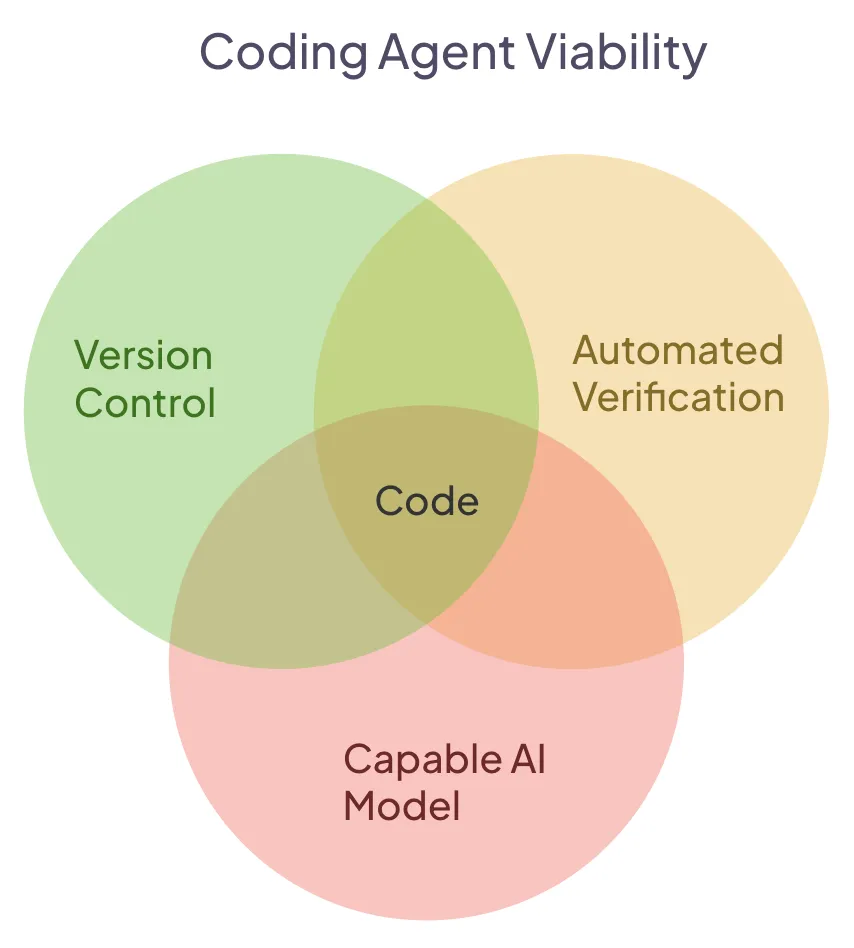

Upon reflection, I think the innovation that made coding agents awesome was pretty simple. It was looping until you get a verifiably correct result. This allowed agents to work for much longer without human intervention. A human can go do other things while an agent or multiple agents work in the background. This looping behavior, combined with a capable AI model and version control, both of which already existed in the chat-based coding era, ushered in the agentic coding era.

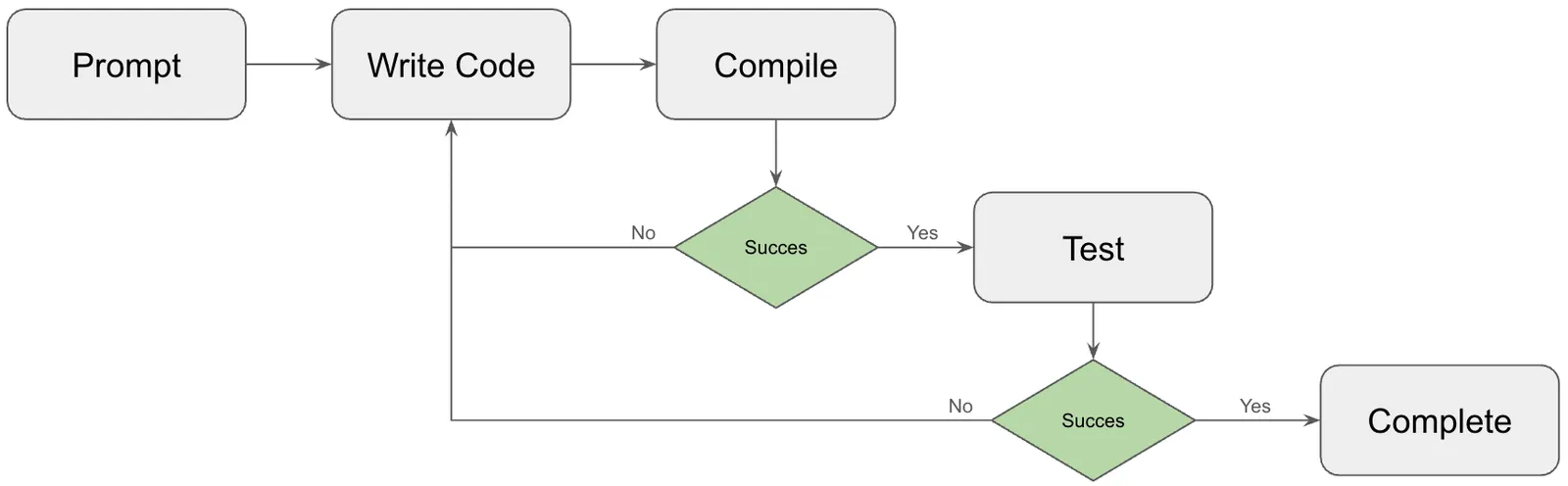

The thing about most code that makes it a perfect use case for agents is you get multiple layers of verifiability: compilation, unit tests, integration tests, and all other types of tests. Claude Code will even test manually if it can. The agent follows a pattern something like this:

1. It writes some code it thinks might work.
2. It tries to compile the code.
3. If compilation succeeds, skip to step 8.
4. If compilation fails, proceed to step 5.
5. It inspects the compilation errors.
6. It fixes the compilation errors with new code.
7. Return to step 2.
8. It runs the tests.
9. If tests succeed, skip to step 14.
10. If tests fail, proceed to step 11.
11. It inspects the test failures.
12. It fixes the test failures with new code.
13. Return to step 2.
14. It tells the user the task is complete.

This sequence works because the agent is getting multiple layers of automated feedback from the system telling it whether what it did was correct. The more layers of tests, the richer the feedback loop. The agent can loop hundreds of times testing hypotheses without the user involved, greatly improving the probability of a correct answer.
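Here is a minimal Python sketch of that loop. It is not how Claude Code is actually implemented. The names `run_agent_loop`, `compiles`, `passes_tests`, and `make_scripted_agent` are hypothetical, and the “agent” is just a scripted list of drafts standing in for a model. The point is the shape: propose, run each layer of verification in order, feed the first failure back, and repeat.

```python
from typing import Callable, List, Optional, Tuple

# A check returns None on success or a feedback message on failure.
Check = Callable[[str], Optional[str]]
# A proposer takes the last feedback (or None) and returns new source code.
Propose = Callable[[Optional[str]], str]


def compiles(source: str) -> Optional[str]:
    """Layer 1: does the candidate even compile?"""
    try:
        compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        return f"compile error: {err.msg}"
    return None


def passes_tests(source: str) -> Optional[str]:
    """Layer 2: does the compiled candidate behave correctly?"""
    namespace: dict = {}
    exec(source, namespace)  # acceptable for a toy sketch only
    if namespace["add"](2, 3) != 5:
        return "test failure: add(2, 3) should be 5"
    return None


def run_agent_loop(
    propose: Propose, checks: List[Check], max_attempts: int = 100
) -> Tuple[str, int]:
    """Loop until a candidate passes every check, or give up."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        candidate = propose(feedback)
        feedback = None
        for check in checks:
            feedback = check(candidate)
            if feedback is not None:
                break  # stop at the first failing layer and try again
        if feedback is None:
            return candidate, attempt
    raise RuntimeError("no candidate passed every check")


def make_scripted_agent(drafts: List[str]) -> Propose:
    """Hypothetical stand-in for a model: replays drafts in order."""
    remaining = iter(drafts)

    def propose(feedback: Optional[str]) -> str:
        # A real agent would read the feedback; this one ignores it.
        return next(remaining)

    return propose


DRAFTS = [
    "def add(a, b) return a + b",          # does not compile
    "def add(a, b):\n    return a - b\n",  # compiles, fails the test
    "def add(a, b):\n    return a + b\n",  # compiles and passes
]

solution, attempts = run_agent_loop(
    make_scripted_agent(DRAFTS), [compiles, passes_tests]
)
```

Here the first draft fails to compile, the second fails its test, and the third passes, so the loop finishes on attempt three with no human involved.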

Agents Need Tests

Extending this lesson to other agentic workflows, the more automated verification you have, the more autonomously agents will work and the greater the probability of a successful result. So, a key to adopting agents in workflows outside of code is going to be automated verification testing. You can get away with human review, or “human in the loop” as we say in AI speak, but this is going to trap you in the Cursor chat-based coding model, not pull you into full agent mode. Agents need tests.

Agents need a verify action for everything they do. Make a write, verify it, commit if verification succeeds, and return to the beginning if it fails. The more layers of verification, the better the agent will do.
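As a rough illustration of write, verify, commit, here is a sketch using Python’s built-in `sqlite3` module. This is plain SQLite, not Dolt, and a transaction is standing in for the isolation a branch would give you. The `orders` table, the quantity bounds, and the `write_verify_commit` helper are all made up for the example.

```python
import sqlite3


def write_verify_commit(conn, write_sql, params, verify_sql):
    """Apply a write, run a verification query, and commit only if
    the query reports zero violations. Otherwise roll the write back."""
    cur = conn.cursor()
    cur.execute(write_sql, params)  # the write opens a transaction
    violations = cur.execute(verify_sql).fetchone()[0]
    if violations == 0:
        conn.commit()
        return True
    conn.rollback()  # the bad write never becomes visible
    return False


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, quantity INTEGER)")
conn.commit()

# Hypothetical rule: every order quantity must be between 1 and 1000.
VERIFY = "SELECT COUNT(*) FROM orders WHERE quantity <= 0 OR quantity > 1000"
INSERT = "INSERT INTO orders (id, quantity) VALUES (?, ?)"

good = write_verify_commit(conn, INSERT, (1, 10), VERIFY)
bad = write_verify_commit(conn, INSERT, (2, 50000), VERIFY)
row_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

The first write passes verification and is committed. The second violates the rule and is rolled back, so only one row survives. An agent that gets `False` back knows to return to the beginning and try a different write.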

Which workflows outside of code have a lot of verification testing? I’m a software engineer by trade so I’m not super familiar with a lot of business workflows. But what I’d say is that every domain outside of software engineering has struggled with automated testing. When I ran the border network at Amazon, network engineers often manually changed configuration on the routers, with only an “over the shoulder” review. We struggled to figure out workflows that integrated automated testing.

I would look to places where agents are already working for answers. Robotics has real world verification testing. The robot falls down or the other car does something you don’t expect. Algorithmic asset trading has checks to make sure orders are within reasonable bounds. Customer Support has verification flows at least for things like refunds. I’m sure there are other examples and I’d love to hear them.

So, a challenge for the agentic age is going to be adding automated verification testing to systems that currently don’t have it. This was always a good idea but now companies can automate much more deeply if they have automated verification. Testing enables agents to do more complicated stuff.

What’s so Hard about Tests?

Even in code, a relatively verifiable domain, testing is a bit of an art. This is after the countless hours engineers have spent making code and frameworks testable. What behavior should be asserted in tests? How do you test a user interface? When should you run the full test suite, given that it takes so long? It’s no surprise to me that testing is lacking in other, harder-to-verify domains.

In other domains, very few problems have a verifiably correct answer. Is this marketing copy good enough? Is this purchasing plan going to be optimal? Is this resume the best it can be? Agents will produce work, but often only a human can tell if it is good enough.

Moreover, even thinking about constraints on solutions is hard. Marketing copy should have sentences under 10 words. Always purchase at least one of these. A resume should not be longer than a printed page. Are these workable verifiable constraints for the questions above? If so, they can be codified as tests. These will help the agent loop a bit longer but the task will still require human review.
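To make that concrete, here is a small Python sketch of the marketing copy constraint written as a test. The `long_sentences` helper and the sample copy are invented for illustration, and splitting sentences on `.`, `!`, and `?` is a deliberate simplification.

```python
import re
from typing import List


def long_sentences(copy: str, max_words: int = 9) -> List[str]:
    """Return every sentence with more than max_words words.
    An empty list means the copy satisfies the constraint."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", copy) if s.strip()]
    return [s for s in sentences if len(s.split()) > max_words]


copy_ok = "Dolt is Git for data. Branch it. Test it."
copy_bad = (
    "Dolt is the only SQL database that gives your agents "
    "branches and tests built in."
)

ok_violations = long_sentences(copy_ok)
bad_violations = long_sentences(copy_bad)
```

A check like this tells the agent whether the copy is short enough, not whether it is any good. That second question still needs a human.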

Lastly, once tests are implemented, constraints can result in false positives. Some great marketing copy may have 11 words in a sentence and your tests will fail. An agent will reject that great solution and move on to another. Humans interacting with the same system may be frustrated by these additional constraints.

Tests are Better with Version Control

There’s an additional underlying problem with testing. If you have tests, what do you do when the tests fail? In many systems, you don’t have a “draft” or “sandbox” mode to make changes. You make changes and the changes are “live”. So, after you go live, let’s say your tests run and fail. What do you do? The changes are already made. If you can, you attempt to roll back the change or maybe you roll forward and fix what you broke. In this case, testing may help you identify your error sooner but it won’t prevent the error in the first place. So testing is treated as burdensome. Code has version control, which allows for easy branching, diffs, rollbacks, and merges, making testing far less burdensome and more worthwhile.

The good news is version control is being added to more domains. We here at DoltHub added version control to SQL databases six years ago and we think agents need version control just as much as agents need tests.

Tests for Databases

In addition to version control, we’re making SQL databases easier to test. Not only is Dolt the world’s first version controlled SQL database, Dolt also is the world’s first database with a built-in test engine. Both these features open up a world of testing that is perfect for agents. Databases end up being the backing store for most applications you want agents to interact with. If you version control and test the database, you have the primitives to version control and test the application on top of the database. This is exciting news for agents.

Tests have traditionally sat outside your database. Great Expectations, for example, is a tool for testing databases. We’ve written about Dolt and Great Expectations before, arguing that test functionality is less useful without version control. What do you do when tests fail? Adding a testing engine into Dolt itself allows you to isolate a change on a branch and run validation tests to prove the branch is correct. If the tests fail, don’t merge the branch.

Dolt is the only database with built-in tests. We call the feature Dolt CI, standing for Dolt Continuous Integration. This feature logically follows from version control functionality. You can run tests locally. Tests also run on DoltHub if you want a decentralized collaboration workflow.

We think Dolt is strategically positioned to be the database for agents. We didn’t build it for agents. We built it for data sharing. It’s just a happy accident that Dolt is perfect for the agentic wave that is coming. Agents need branches. Agents also need tests. Dolt is the only database with both of those.

Conclusion

Agents need branches. Agents need tests. Dolt has branches and tests. Dolt is the database for agents. Have an agentic use case in mind? We’d love to hear about it. Come by our Discord and share.