Dolt is the world’s first SQL database which enables users to branch and merge data. The concept of a version controlled database is foreign to most developers, and so we talk about it a lot. Why is a version controlled database useful, and how can you use it to make your life easier?

There are many facets to this, but today we’ll talk specifically about how versioning can allow you to verify the quality of your data in ways that aren’t possible with other databases.

The Basics#

SQL Databases have mechanisms to ensure data correctness today. These are in the form of Unique Keys, Data Constraints, Foreign Key Constraints, and so on that you are likely familiar with. These are table stakes in the relational SQL universe that have been around for decades.

These mechanisms serve an important purpose, which is to ensure you have enough structure within your data to reliably build an application on it. You want to have confidence that when you request a row of data, all of the values are not NULL, and that if you update one table which depends on values in another table, the expected value is actually there. Having these assurances has allowed applications to be built for years, many of which are foundational infrastructure to large corporations and governments and will be for years to come.

To give a more specific example, I’m going to harken back to a previous blog post where I built Battleship on Dolt. You can see a couple of data quality mechanisms in one of the board tables:

CREATE TABLE `blue_board` (

`x` enum('A','B','C','D','E','F','G','H','I','J') NOT NULL,

`y` int NOT NULL,

`content` char(1) NOT NULL DEFAULT ' ',

PRIMARY KEY (`x`,`y`),

CONSTRAINT `blue_board_chk` CHECK (((`y` >= 1) AND (`y` <= 10)))

)This models what the blue player sees, which is a 10x10 board accessed by codes like A4, which is modeled as x and y columns. The content is a single character which we’ll talk more about in a moment.

There are a couple of data quality mechanisms seen here. The enum ensures that the x position of the board is confined to a specific set of letter values. The PRIMARY KEY designation ensures that we only have one value for each x,y pair. Everything must not be NULL. Having these assurances means that the application reading them can have confidence that the data is sane. For example, I don’t need to validate that y is not over 10 in my application code because the database constrains the data to ensure it’s always <= 10. It’s impossible to write a value which would violate that rule, so we can have confidence that it’s impossible to read such a value.

Gaps#

As stated above, these are table stakes mechanisms for any SQL database. And yet, we very regularly see applications requiring additional constraints above and beyond what you can support with native SQL.

In the battleship schema described above, there is nothing from preventing me from having 10 destroyers on the board. The content column is a single character. They represent what is at that position on the given board. The application code outlines what is permitted

// Ship types and their properties for standard Battleship game

const (

// Ship type characters used on the board

CARRIER_CHAR = 'C'

BATTLESHIP_CHAR = 'B'

CRUISER_CHAR = 'R' // <-- We'll use that one.

SUBMARINE_CHAR = 'S'

DESTROYER_CHAR = 'D'

// Hit and miss markers

HIT_CHAR = 'X'

MISS_CHAR = 'O'

EMPTY_CHAR = ' '

)We could have added a constraint in the data schema which ensured those 8 characters were the only ones permitted in the content column of the database. Unfortunately this is of limited usefulness when we consider all the other ways we could insert bad data in this table.

To step back a bit, placing the length 3 Cruiser ship, on the board we need to add 3 rows to the database with content R. Those rows specifically need to be in a line on the board. Example:

select * from blue_board where content = 'R';

+---+---+---------+

| x | y | content |

+---+---+---------+

| A | 2 | R |

| A | 3 | R |

| A | 4 | R |

+---+---+---------+There is no SQL mechanism to tell you that is a valid state of the board vs the following which would be a diagonally placed ship, obviously illegal:

select * from blue_board where content = 'R';

+---+---+---------+

| x | y | content |

+---+---+---------+

| A | 2 | R |

| B | 3 | R |

| C | 4 | R |

+---+---+---------+In order to ensure this never happens application code must be implemented and tested. Ultimately SQL databases can only ensure so much application specific structure is enforced. People who write applications against traditional SQL databases are pretty familiar with this reality. There are frameworks such as Great Expectations which exist to help you keep your data clean by looking at the state of the database at a given time and ensure it has more subtle application specific sanity checks of the data.

The Power of a Query#

While there isn’t a built-in way to ensure that the Cruiser’s position on the table is valid, we can write a query which tells us if it is or not. Here is a query to verify that the Cruiser, R, is placed on the board correctly:

-- 1 = valid cruiser placement, 0 = invalid

SELECT

(SELECT COUNT(*) FROM blue_board WHERE content = 'R') = 3

AND (

-- Vertical line: same x, three consecutive y's

EXISTS (

SELECT 1

FROM blue_board

WHERE content = 'R'

GROUP BY x

HAVING COUNT(*) = 3 AND MAX(y) - MIN(y) = 2

)

OR

-- Horizontal line: same y, three consecutive x's.

-- ENUM('A'..'J') coerces to its ordinal with (x+0)

EXISTS (

SELECT 1

FROM (

SELECT (x + 0) AS xi, y

FROM blue_board

WHERE content = 'R'

) r

GROUP BY y

HAVING COUNT(*) = 3 AND MAX(xi) - MIN(xi) = 2

)

) AS cruiser_valid;This query takes some liberties, such as coercing the ENUM values, and the MAX - MIN is a little bit tricky, so it may not be code that stands the test of time. The point is that, at the start of the game, when all the ships have been laid out on the board, this query should pass. If it doesn’t pass, then your application code is incorrect because it put the Cruiser in an illegal position. You could easily create tests for the other four types of ships that can be added to the board, and there you have it: a Test Suite.

We recently announced the availability of the dolt_tests table, which allows you to save such queries. You can create tests by inserting directly into the table, then committing the results. That query above is long, so I’ll simplify as {TEST QUERY} here:

mydb/main> insert into dolt_tests values ("cruiser start", "game_start_positions", "{TEST QUERY}", "expecting_single_value", "==", "true");

mydb/main*> \add dolt_tests

mydb/main*> \commit -m "Add cruiser start test"One of the nice features is that you can group tests together by giving a group as the second column in the insert. You can gather those 5 queries which verify ships are in legitimate positions into a group, game_start_positions, and you could run them all with the following code:

mydb/main> SELECT test_name,test_group,status FROM dolt_test_run("game_start_positions");

+----------------------+----------------------+--------+

| test_name | test_group_name | status |

+----------------------+----------------------+--------+

| carrier start | game_start_positions | PASS |

| battleship start | game_start_positions | PASS |

| cruiser start | game_start_positions | PASS |

| submarine start | game_start_positions | PASS |

| destroyer start | game_start_positions | PASS |

+----------------------+----------------------+--------+The dolt_tests table is like embedding your unit tests in your source code, but for your data. You are codifying executable queries which should validate your data at a point in time. This can be used for all sorts of validations like ensuring a dataset has a reasonable statistical spread, or asserting that certain business rules hold true as new data arrives. Since tests live alongside the data itself, they provide a transparent, version controlled, and repeatable mechanism for guaranteeing data quality throughout the lifecycle of your project.

Now with Version Control#

Tests of state at a particular point in time are useful, but we can do one better. Version Control unlocks another testing ability which is to enable you to verify how your data has changed.

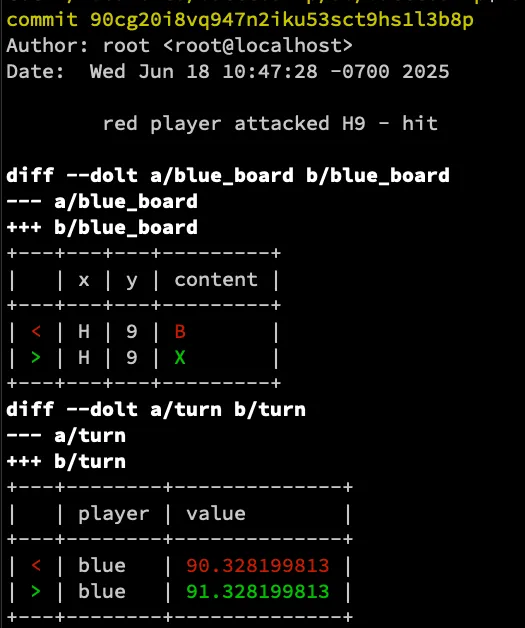

Let’s go back to the Battleship example. During the game, a commit is made to the database to complete each players move. What a move looks like involves two updates. First, we update the board (we’ll stick to blue_board still) by updating one and only one row. The opponent says strike A1, if if it’s a hit we update from the ship identifier to the X character. If it’s a miss we insert O. Second, we update the turn table, which is a table that has a limit of two rows. Each row’s value starts as a number between 0 and 1, and serves as a way to roll the dice at the beginning of the game. Each move increments the value of the other player by 1. That is all to say that this is what the difference looks like for the 90th move into the game. The Red player has hit the Blue player’s battleship:

We can used the dolt_diff table function to inspect the difference of the latest change:

> SELECT

(total_diffs = 1

AND modified_diffs = 1

AND modified_to_X = 1) AS valid_update

FROM (

SELECT

COUNT(*) AS total_diffs,

SUM(CASE WHEN diff_type = 'modified' THEN 1 ELSE 0 END) AS modified_diffs,

SUM(CASE WHEN diff_type = 'modified' AND to_content = 'X' THEN 1 ELSE 0 END) AS modified_to_X

FROM dolt_diff("HEAD~1", "HEAD", 'blue_board')

) t;

+--------------+

| valid_update |

+--------------+

| true |

+--------------+We can also verify that the turns table was updated in the way we would expect - increase blue in value by 1.0:

> SELECT

-- cope with float equality comparison by allowing for a very small delta.

(blue_mod_rows = 1 AND ABS(blue_delta - 1.0) < 1e-9) AS valid_turn_update

FROM (

SELECT

SUM(CASE WHEN diff_type = 'modified' AND from_player = 'blue'

THEN 1 ELSE 0 END) AS blue_mod_rows,

SUM(CASE WHEN diff_type = 'modified' AND from_player = 'blue'

THEN (to_value - from_value) ELSE 0 END) AS blue_delta

FROM dolt_diff("HEAD~1", "HEAD", 'turn')

) t;

+-------------------+

| valid_turn_update |

+-------------------+

| true |

+-------------------+Each of the queries above could constitute stand alone tests which you could store in the dolt_tests table. Or you could perform them as one large query. You could extend them each to account for the other types of legitimate moves such as the blue player missing a shot, or red winning the game.

The Battleship game was written to take advantage of Dolt commits for each player move. As a result, the history of any game can be inspected. You can look at any specific commit, and verify that the data was transformed in the way you expected your application to behave.

Bringing it all together, I’ll add that last query to the dolt_tests table, and break the data.

mydb/main> INSERT INTO dolt_tests VALUE ('blue_turn','',

"SELECT

-- cope with float equality comparison by allowing for a very small delta.

(blue_mod_rows = 1 AND ABS(blue_delta - 1.0) < 1e-9) AS valid_turn_update

FROM (

SELECT

SUM(CASE WHEN diff_type = 'modified' AND from_player = 'blue'

THEN 1 ELSE 0 END) AS blue_mod_rows,

SUM(CASE WHEN diff_type = 'modified' AND from_player = 'blue'

THEN (to_value - from_value) ELSE 0 END) AS blue_delta

FROM dolt_diff('HEAD~1', 'HEAD', 'turn')) t",

"expected_single_value","==","true");

Query OK, 1 row affected (16.15 sec)

mydb/main> \commit -A -m "Create a test to validate turn value update"Let the game play, then after an arbitrary Red move, run the test:

mydb/main> select test_name,status,message from dolt_test_run('blue_turn');

+-----------+--------+---------+

| test_name | status | message |

+-----------+--------+---------+

| blue_turn | PASS | |

+-----------+--------+---------+

1 row in set (0.00 sec)

mydb/main> update turn set value = 42.0 where player = 'blue';

Query OK, 1 row affected (0.01 sec)

mydb/main*> \add turn

mydb/main*> \commit -m "Break the turn value for blue"

commit rbjdi0a0ifj527u41baqepuasurn7kk3 (HEAD -> main)

Author: macneale <neil@dolthub.com>

Date: Thu Oct 02 11:45:48 -0700 2025

Break the turn value for blue

mydb/main> select test_name,status,message from dolt_test_run('blue_turn');

+-----------+--------+------------------------------------------------------------------+

| test_name | status | message |

+-----------+--------+------------------------------------------------------------------+

| blue_turn | FAIL | Assertion failed: expected_single_value equal to true, got false |

+-----------+--------+------------------------------------------------------------------+

1 row in set (0.00 sec)We know that last commit was bad, because it failed it’s test. To get your data back into

a healthy state, reset --hard that thing away:

mydb/main> \reset --hard HEAD~1

select test_name,status,message from dolt_test_run('blue_turn');

+-----------+--------+---------+

| test_name | status | message |

+-----------+--------+---------+

| blue_turn | PASS | |

+-----------+--------+---------+

1 row in set (0.00 sec)This is the magic of a version control enabled SQL database. This is the magic of Dolt.

Continuous Integration#

Up until now, we talked about Dolt’s the ability to test your database state at a point in time, and as it changes over time. You can think of this like Unit testing on your local database. Continuous Integration was added to DoltHub last year, and it goes a step further.

With CI, we clone your database, and mess with it in isolation. Any number of queries can be run as part of the validation. These typically modify the data in some way, then verify the updates were what you expected.

Similar to GitHub Actions, Dolt CI runs on a pull request in Dolthub.com. Rather than think of this level of testing as Unit Test, it’s more of a sandbox test that can be run in a trusted place. This allows you to review the data changes made by another user and ensure that your validation passes before you approve the request.

The critical piece here is the changes can be destructive because you will be throwing away the clone after the validation takes place. On balance, it’s important to call out that dolt_tests can’t perform updates.

In our Battleship game, the main branch never has any game tables on it, if the application is behaving correctly. Specifically, the red_board,blue_board, and turn tables never exist on main. We can write a query which verifies that:

SELECT

COUNT(*) = 0 AS all_missing

FROM information_schema.tables

WHERE table_schema = DATABASE()

AND table_name IN ('red_board', 'blue_board', 'turn');

+-------------+

| all_missing |

+-------------+

| true |

+-------------+In an integration test scenario, your application would run a full game simulation on the CI clone, then verify that the main branch didn’t get polluted with the game tables. To verify that, run the query above. This isn’t full possible on Dolthub.com yet, because we don’t allow for arbitrary code execution. The idea is still the same: run your code, and then perform exhaustive tests on your data to verify your application is behaving as expected.

Agentic#

We’ve talked a lot about AI Agents and Dolt, and we really believe Dolt’s version control and testing are essential for real usage of Agents on databases. There are numerous examples of AI Agents performing destructive actions. Here’s one, but it’s not the only one. The simple response to this would be to just forbid your agents from performing writes by not giving them the keys to your database. If we limit ourselves to that degree, all the AI hype we are currently suffering through will be just that: hype. In order to get real work done by agents, they are going to need to be able to write.

Dolt’s testing abilities described earlier are exactly what Agents need. Agents can be made wise to the dolt_tests table so that they can validate their changes. This would be equivalent to expecting your coding agent to run unit tests locally to ensure changes work. When the changes are ready, the agent can create a Dolt pull request. That pull request can have CI tests to ensure the agent’s changes don’t break expectations of the database.

Conclusion#

Dolt offers many options to help you build a high degree of confidence in the quality of your data. We have the table stakes of standard SQL databases, sure, but only Dolt has the ability to track tests as versioned entities and the ability to look at historical changes over time.

There are other things we could build, such as automatically running tests before committing, or dolt bisect to find where your data deviated from the expectations of tests. We could also add capabilities to dolt_test_run() to target non-HEAD changes. What would be most useful to you? Come to our Discord server and let us know how Dolt can better solve your problems!