Writing yacc parsers with golang: tips and tricks

This article discusses the technical details of writing yacc grammars / parsers using the goyacc tool. It's part of our our technical Golang blog series. We publish a new article in the series every 3 weeks.

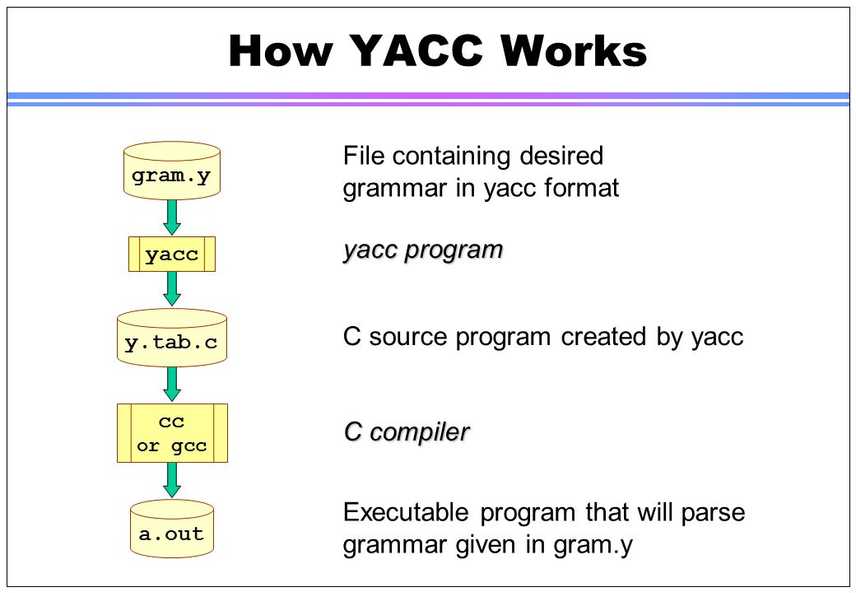

We're writing Dolt, a version-controlled SQL database. The database needs to

parse SQL query strings into a structured form the database can execute. Our solution to this

problem, like most other databases, is to use yacc, which is a form of compiler that turns a

simple grammar into a parser. Go has its own version of this tool, goyacc, which mostly works the

same as vanilla yacc but is better tuned for generating parsers in go.

This blog isn't an introduction to yacc grammars generally, since that's covered pretty well in many other places. Rather, we want to provide some tips and tricks for making yacc work well with go, highlighting some of the issues we've faced and how we overcame them.

The basics: the grammar file and the goyacc command

yacc grammars are a compact way to represent a structured language, which is why you find them all

over the place. Humorously, YACC stands for "Yet Another Compiler-Compiler", and takes its place in

the computer science pantheon alongside other tools that didn't expect to catch on (like YAML, "Yet

Another Markup Language"). As I said, it's not our intention to write a beginner's guide to

yacc. One of the advantages of using a tool like this is that it's 50 years old and there are no

shortage of tutorials to help you along.

But as an example, here's a simple grammar rule defined in yacc syntax:

algorithm_opt:

ALGORITHM '=' UNDEFINED

{

$$ = string($3)

}

| ALGORITHM '=' MERGE

{

$$ = string($3)

}

| ALGORITHM '=' TEMPTABLE

{

$$ = string($3)

}A full yacc file contains a bunch of tokens (like ALGORITHM above), an entry point rule definition

where parsing begins, and then a series of rules (like algorithm_opt) which can contain references

to tokens or to other rules. You put all these rules and definitions into a .y file, then invoke

goyacc on it to generate a parser for the grammar. They also typically begin with a quoted block

of code that gets put verbatim into the generated parser. Ours starts like this:

%{

package sqlparser

import "fmt"

import "strings"

func setParseTree(yylex interface{}, stmt Statement) {

yylex.(*Tokenizer).ParseTree = stmt

}

...

%}The goyacc tool is provided by the golang.org/x/tools/cmd/goyacc package, which you can install

with go install. Once you have it on your $PATH, you run it on a .y file like so:

% goyacc -o sql.go sql.ysql.y is the name of our SQL grammar, and sql.go is the go parser file we want it to

generate. If you want to try this yourself, you can do so on our fork of

Vitess in the go/vt/sqlparser directory. We check the

generated sql.go file into source control.

Sounds easy right? Well, it usually is, at first. But if you're anything like us, you're likely to run into problems pretty fast.

The terror of yacc: the shift/reduce conflict

yacc grammars have a pretty nasty common problem called a shift/reduce conflict. Essentially this

means that your grammar is constructed in such a way that the state machine parser generated can't

decide unambiguously which token to expect next, and you have to disambiguate it. This is best

illustrated with an example.

aliased_table_options:

as_of_clause_opt index_hint_list

{

$$ = &AliasedTableExpr{AsOf: $1, Hints: $2}

}

| as_of_clause_opt as_opt table_alias index_hint_list

{

$$ = &AliasedTableExpr{AsOf: $1, As: $3, Hints: $4}

}

as_of_clause_opt:

{

$$ = nil

}

| as_of_clause

{

$$ = $1

}

as_of_clause:

as_of_point_clause

{

$$ = $1

}

| between_times

{

$$ = $1

}

| between_versions

{

$$ = $1

}

| all_times

{

$$ = $1

}

| all_versions

{

$$ = $1

}

as_opt:

{ $$ = struct{}{} }

| AS

{ $$ = struct{}{} }

index_hint_list:

{

$$ = nil

}

| USE INDEX openb column_list closeb

{

$$ = &IndexHints{Type: UseStr, Indexes: $4}

}

| IGNORE INDEX openb column_list closeb

{

$$ = &IndexHints{Type: IgnoreStr, Indexes: $4}

}

| FORCE INDEX openb column_list closeb

{

$$ = &IndexHints{Type: ForceStr, Indexes: $4}

}This set of rules is intended to parse options related to a table in a SELECT statement. Here's a

statement that uses all these optional elements:

select 1 from

t1 as of '2019-01-01'

as myTable

use index (`By`)

where b = 1;But yacc can't generate a parser guaranteed to be correct for this grammar. When we run it we see these dreaded words:

% goyacc -o sql.go sql.y

conflicts: 2 shift/reduceThis means that the parser it just generated will be ambiguous and not generate correct results for

some inputs. To figure out why, we need to dig into the debug output for the tool, which is captured

in a file called y.output. If we search in that file for "shift/reduce", we find this:

2203: shift/reduce conflict (shift 1184(0), red'n 1144(0)) on AS

state 2203

aliased_table_name: table_name.aliased_table_options

aliased_table_name: table_name.PARTITION openb partition_list closeb aliased_table_options

as_of_clause_opt: . (1144)

FOR_SYSTEM_TIME shift 2552

FOR_VERSION shift 2553

AS shift 1184

VERSIONS shift 2554

PARTITION shift 2544

. reduce 1144 (src line 5680)

aliased_table_options goto 2543

as_of_clause goto 2546

as_of_point_clause goto 2547

between_times goto 2548

between_versions goto 2549

all_times goto 2550

all_versions goto 2551

as_of_clause_opt goto 2545This is pretty hard to read, but it gets easier once you've stepped on this rake a few dozen times:

- The numbers are state numbers in the giant state machine that implements the parser

shiftmeans consuming another tokenred'nis thereducepart of ashift/reduceconflict, basically meaning resolving a rule and returning a result to an earlier layer in the stack. It's what's denoted by..

So the above output is telling us there is ambiguity in the grammar. When in the

aliasted_table_options rule looking at the token AS, it can't decide if it's part of the clause

AS OF '2020-01-01', or the clause AS myTable. This is a limitation of yacc parsers: they belong

to a class of parsers known as look-ahead left-to-right (LALR) parsers. In particular, yacc parsers

are LALR(1) parsers, meaning they can only consider a single next token to decide which state to go

to next.

So what do you do when confronted a shift/reduce conflict?

Reducing rule nesting to eliminate ambiguity

The parsers generated by yacc very closely mirror the structure of the grammar rules they were given. This means that it's often the case that you can eliminate grammar ambiguities by "inlining" rules into a parent rule where necessary. For example, this rule is a conflict-free version of the one above:

// All possible combinations of qualifiers for a table alias expression, declared in a single rule to avoid

// shift/reduce conflicts.

aliased_table_options:

index_hint_list

{

$$ = &AliasedTableExpr{Hints: $1}

}

| as_opt table_alias index_hint_list

{

$$ = &AliasedTableExpr{As: $2, Hints: $3}

}

| as_of_clause index_hint_list

{

$$ = &AliasedTableExpr{AsOf: $1, Hints: $2}

}

| as_of_clause as_opt table_alias index_hint_list

{

$$ = &AliasedTableExpr{AsOf: $1, As: $3, Hints: $4}

}In this version of the rule, there's no ambiguity in the grammar because we've replaced

as_of_clause_opt with as_of_clause, and then generated every combination of correct

constructions in a single rule. This gives us twice as many patterns to match in the rule itself.

By a very large amount, this has been the single most useful trick in our arsenal to eliminate

shift/reduce conflicts. It comes up often in grammars like SQL, where there are many optional

clauses. yacc often doesn't like two optional rules (rules that can match empty input) in a row,

especially when they begin with the same keyword. In the original formulation of the rule, it's

impossible for the parser to decide between AS OF .. and AS mytable because they're both

optional, so our solution involves making one of the rules not match empty input and then

enumerating all the combinations manually.

Tricks in the lexer

yacc grammars don't provide lexing, also called tokenization. Lexing is the process of breaking the

input string into discrete tokens, which then match the rules defined in the grammar. You have to

write one yourself. This isn't usually super challenging. Basically it involves writing a function

called Scan() that returns the next token, usually represented as an int generated by the

parser. Here's what ours looks like, in part:

func (tkn *Tokenizer) Scan() (int, []byte) {

tkn.skipBlank()

switch ch := tkn.lastChar {

case isLetter(ch):

tkn.next()

if ch == 'X' || ch == 'x' {

if tkn.lastChar == '\'' {

tkn.next()

return tkn.scanHex()

}

}

if ch == 'B' || ch == 'b' {

if tkn.lastChar == '\'' {

tkn.next()

return tkn.scanBitLiteral()

}

}

return tkn.scanIdentifier(byte(ch), false)

case isDigit(ch):

typ, res := tkn.scanNumber(false)

if typ != LEX_ERROR {

return typ, res

}

// LEX_ERROR is returned from scanNumber iff we see an unexpected character,

// so try to parse as an identifier

// Additionally, if we saw a decimal at any point, throw the LEX_ERROR we received before

for _, c := range res {

if c == '.' {

return typ, res

}

}

typ1, res1 := tkn.scanIdentifier(byte(tkn.lastChar), false)

return typ1, append(res, res1[1:]...) // Concatenate the two partial symbols

... // lots more casesEssentially we're looking at the next character of the input stream (or sometimes 2 characters) and

then deciding how to lex the next token based on that hint. Either we succeed and return the token

type and its value, or we fail and return LEX_ERROR, which bubbles back to the user as a syntax

error. Then the state machine of the parser updates based on this token, and we do it all over again

until there's no more input.

What's interesting about the lexer implementation is that you can use it to overcome limitations of yacc grammars if you're creative. We mentioned earlier that yacc parsers are LALR(1) parsers, and this makes it impossible to parse some constructs in SQL. For example, consider these two select statements:

SELECT * FROM mytable FOR UPDATE;

SELECT * FROM mytable FOR SYSTEM_TIME AS OF '2020-01-01';Being a LALR(1) parser, a naive grammar hits the FOR keyword and can't decide if it's part of FOR

UPDATE or FOR SYSTEM_TIME -- it would need to look ahead one more token to make that

decision. Worse, the complexity of the SELECT syntax makes it pretty much impossible to remove

this ambiguity by inlining rules like we did above. But we can make the parser work by hacking the

grammar to turn FOR SYSTEM_TIME into a single token instead, essentially making it LALR(2) for

just this one special case.

First we define a token and rule for this special double-token:

as_of_point_clause:

AS OF value_expression

{

$$ = &AsOf{Time: $3}

}

| FOR_SYSTEM_TIME AS OF value_expression

{

$$ = &AsOf{Time: $4}

}FOR_SYSTEM_TIME, not FOR SYSTEM_TIME. Then we hack the tokenizer's scanIdentifier() method to

special-case the FOR keyword.

func (tkn *Tokenizer) scanIdentifier(firstByte byte, isDbSystemVariable bool) (int, []byte) {

buffer := &bytes2.Buffer{}

buffer.WriteByte(firstByte)

for isLetter(tkn.lastChar) || isDigit(tkn.lastChar) || (isDbSystemVariable && isCarat(tkn.lastChar)) {

buffer.WriteByte(byte(tkn.lastChar))

tkn.next()

}

lowered := bytes.ToLower(buffer.Bytes())

loweredStr := string(lowered)

keywordID, found := keywords[loweredStr]

if found {

// Some tokens require special handling to avoid conflicts in the grammar.

// This means we're doing additional look-ahead just for these special tokens.

switch keywordID {

case FOR:

token, val := tkn.Scan()

switch token {

case SYSTEM_TIME:

return FOR_SYSTEM_TIME, append(buffer.Bytes(), append([]byte{' '}, val...)...)

default:

tkn.digestToken(token, val)

return FOR, buffer.Bytes()

}

}

return keywordID, buffer.Bytes()

}

return ID, buffer.Bytes()

}When I thought of this solution to the problem I felt pretty dirty, but then I did a little research and found out that MariaDB uses the exact same hack. To me this is an indication that the ISO committee should attempt to implement the grammars they come up with before publishing them, but that ship has sailed I guess. (Temporal queries were added to SQL in 2011).

Supporting different parser modes

Another drawback to yacc grammars is their relative inflexibility: your grammar is statically defined at compile time, and there's no way to modify how they work at runtime. But here again, the lexer can help work around this problem if your domain requires it.

As an example, SQL has a couple different modes that fundamentally alter how the parser identifies string literals and identifiers:

- In ANSI mode, you quote column names and other identifiers with

", and string literals must be delimited with' - In normal mode (the default), string literals can be quoted with either

'or", and you can't quote identifiers with", only with backticks

A customer recently came to us requiring ANSI mode, so we had to make our parser support

it. Fortunately, this problem can be solved entirely in the lexer without grammar changes. All it

requires is the introduction of a mode parameter that changes how string literals and identifiers

are tokenized.

// NewStringTokenizer creates a new Tokenizer for the

// sql string.

func NewStringTokenizer(sql string) *Tokenizer {

buf := []byte(sql)

return &Tokenizer{

buf: buf,

bufSize: len(buf),

identifierQuotes: map[uint16]struct{}{backtickQuote: {}},

stringLiteralQuotes: map[uint16]struct{}{doubleQuote: {}, singleQuote: {}},

}

}

// NewStringTokenizerForAnsiQuotes creates a new Tokenizer for the specified |sql| string, configured for

// ANSI_QUOTES SQL mode, meaning that any double quotes will be interpreted as quotes around an identifier,

// not around a string literal.

func NewStringTokenizerForAnsiQuotes(sql string) *Tokenizer {

buf := []byte(sql)

return &Tokenizer{

buf: buf,

bufSize: len(buf),

identifierQuotes: map[uint16]struct{}{backtickQuote: {}, doubleQuote: {}},

stringLiteralQuotes: map[uint16]struct{}{singleQuote: {}},

}

}Then in the giant switch statement that decides how to lex the next token, we changed:

case '\'', '"':

return tkn.scanString(ch, STRING)To:

case contains(tkn.stringLiteralQuotes, ch):

return tkn.scanString(ch, STRING)Golang's case statements being very flexible made this an easy change.

If your runtime flexibility is much more demanding than the above example, you may need to maintain

two separate, slightly different grammars that you keep in sync. There are versions of yacc that

introduce #ifdef style constructs to produce different forms of the same base parser based on

flags, but we don't have any experience with these and how well they play with golang and goyacc.

Conclusion

Writing parsers with goyacc has been a very interesting puzzle that sometimes has had us crawling up the walls with frustration. But it's still probably better than writing a recursive descent parser from scratch. Hopefully someone who needs the exact kind of hints we spell out here finds this letter in a bottle and is saved.

Have questions about Dolt or goyacc? Join us on Discord to talk to our engineering team and meet other Dolt users.