Using SQLAlchemy for Dolt Time Travel

A customer was struggling with how to use standard ORM tools, like SQLAlchemy, to access Dolt time travel features across schema changes.

One specific problem area is that combining app ORM/schema tools with Dolt time travel features has been very punishing.

We were concerned. Once we dug in, we realized SQLAlchemy Database Introspection, or as it was previously called reflection, is a really elegant way to access Dolt time travel features across schema changes. This blog will walk you through a detailed example of how to access history using SQLAlchemy.

How to Time Travel in Dolt

Dolt is the world's first version controlled SQL database. Dolt adopts the Git model of version control but the target is SQL tables, not files. When you want a version to be able to be accessed via time travel you make a Dolt commit, not to be confused with a transaction commit.

Dolt uses Git's concept of a revision. A revision can be a branch name, a tag name, a commit hash, or some special keywords like HEAD or WORKING. Revisions can also have modifiers like ^ and ~. Dolt tries it's best to match Git revisions. As of today, Dolt is missing short hashes as a means of specifying a revision, but otherwise should match Git.

Once you've identified the revision of the Dolt database you would like to access, there are a few ways to access the data and schema stored within the revision.

Checkout a branch

You can create a branch at any revision using dolt checkout on the command line or call dolt_checkout() using the SQL interface. Of all these options, checkout is the one you want to use if you intend to make writes to your revision.

call dolt_checkout('-b', 'newbranch', 'doltc1cgkfdf4ns2a1pd6j37pclivtbp');This creates a new branch called newbranch at commit doltc1cgkfdf4ns2a1pd6j37pclivtbp and your SQL session can now read and write to the database as it existed at that commit.

AS OF Queries

If you only want to read from a revision, Dolt supports the AS OF <revision> query syntax. This was added to the SQL standard to support temporal tables. All tables in Dolt are temporal tables.

select * from t as of 'doltc1cgkfdf4ns2a1pd6j37pclivtbp';

select * from t as of 'newbranch';This query reads table t at revision doltc1cgkfdf4ns2a1pd6j37pclivtbp and branch newbranch respectively. These would return the same output assuming newbranch had not been modified.

Revision Databases

Dolt also supports revision database specifications. By appending /<revision> to your database name, Dolt will access that database at that revision. So you can USE a database revision or fully qualify tables using database revisions.

use `db/doltc1cgkfdf4ns2a1pd6j37pclivtbp`;

show create table `db/newbranch.t`;This is the most flexible way to access revisions as you can have multiple revisions being accessed in the same query.

select * from db/main.a natural join db/newbranch.t;The Problem

When databases change schema, your application needs to be aware of the new schema. If your application is not aware of the new schema, queries will fail. For example, if you rename a column from lastname to last_name, code that refers to the old name must change or you'll get a column not found error.

This problem has typically been referred to as the database migration problem. Some database tools are capable of handling multiple schemas so you don't have to change your database and code at the same time. In SQLAlchemy, the way they achieve this is database introspection, aka reflection. SQLAlchemy will inspect the schema of the database and change the objects it returns accordingly. This allows you to run the same code on multiple versions of the schema.

In Dolt, it is possible to time travel across schemas using the time travel methods listed above. Using these methods exposes your application to the same problem as traditional database migrations. Your application now needs to understand multiple schemas. As you might have guessed, using database introspection is a good way to solve the time travel problem as well.

The Dolt Database

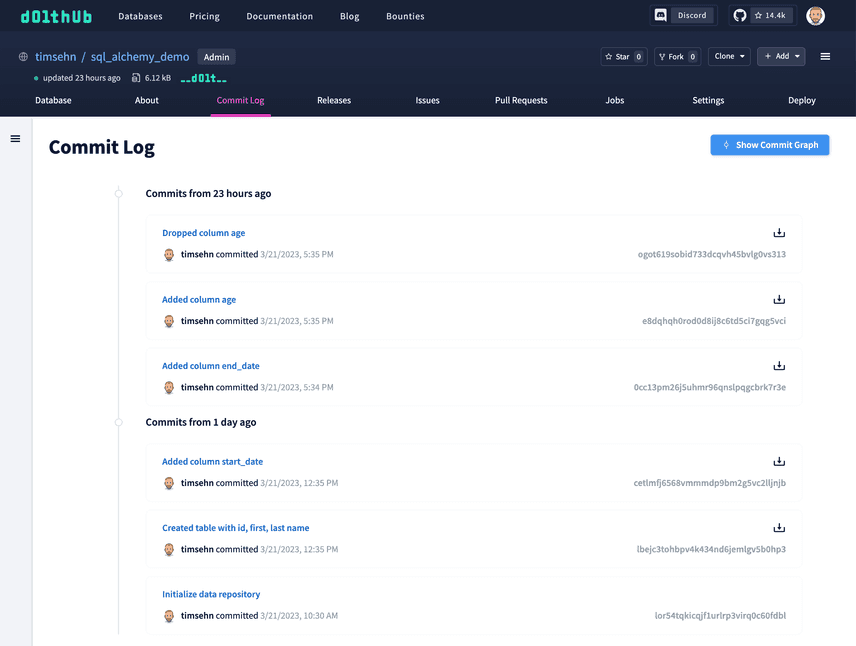

For this demonstration I needed a Dolt database with some schema changes. I made this demo database with a single employees table. The employees table has a different schema across a number of commits. The table is empty as that is not important for this demo.

You'll need to clone it and start a dolt sql-server if you want to recreate the demo. The good news is that in Dolt sharing databases is really easy.

$ dolt clone timsehn/sql_alchemy_demo

cloning https://doltremoteapi.dolthub.com/timsehn/sql_alchemy_demo

$ cd sql_alchemy_demo

$ dolt sql-server

Starting server with Config HP="localhost:3306"|T="28800000"|R="false"|L="info"|S="/tmp/mysql.sock"The Python Code

Now, we set out to show how to use SQLAlchemy reflection to grab the varying schemas so our application can change behavior accordingly.

Grab the Dependencies

Python is kind of a dependency mess. We only need sqlalchemy and mysql-connector-python for this demo. mysql-connector-python is a native Python MySQL client so it does not require MySQL to be installed.

pip install sqlalchemy

pip install mysql-connector-pythonThe code

The code can be found in this GitHub repository. It's 27 lines. First we connect to Dolt. Then, we query the dolt_log system table to find all commits. Then we loop through all the commits and use reflection combined with the revision database method of accessing Dolt history to infer the schema of the tables at every commit. We then print out the columns in the employees table.

from sqlalchemy import create_engine, MetaData, Table, Column, select, text

engine = create_engine(

"mysql+mysqlconnector://root@127.0.0.1:3306/sql_alchemy_demo")

con = engine.connect()

metadata_obj = MetaData()

dolt_log = Table("dolt_log", metadata_obj, autoload_with=engine)

stmt = select(dolt_log.c.commit_hash).order_by(dolt_log.c.date.desc())

results_proxy = con.execute(stmt)

for row in results_proxy.fetchall():

commit = row[0]

print(commit)

# Go back in time.

database_spec = "sql_alchemy_demo/" + commit

metadata_obj = MetaData(schema=database_spec)

metadata_obj.reflect(engine)

try:

employees_table = Table("employees", metadata_obj, autoload_with=engine)

for c in employees_table.c:

print(c)

except:

print("employees table not found")

passThe Result

Running this script outputs the following:

$ python demo.py

ogot619sobid733dcqvh45bvlg0vs313

employees.id

employees.last_name

employees.first_name

employees.start_date

employees.end_date

e8dqhqh0rod0d8ij8c6td5ci7gqg5vci

employees.id

employees.last_name

employees.first_name

employees.start_date

employees.end_date

employees.age

0cc13pm26j5uhmr96qnslpqgcbrk7r3e

employees.id

employees.last_name

employees.first_name

employees.start_date

employees.end_date

cetlmfj6568vmmmdp9bm2g5vc2lljnjb

employees.id

employees.last_name

employees.first_name

employees.start_date

lbejc3tohbpv4k434nd6jemlgv5b0hp3

employees.id

employees.last_name

employees.first_name

lor54tqkicqjf1urlrp3virq0c60fdbl

employees table not foundAs you can see, the table's schema evolves throughout history and each column is available and visible in SQLAlchemy.

If you run dolt sql-server with log level debug you will see this reflection runs:

SHOW FULL TABLES FROM `sql_alchemy_demo/lbejc3tohbpv4k434nd6jemlgv5b0hp3`

SHOW CREATE TABLE `sql_alchemy_demo/lbejc3tohbpv4k434nd6jemlgv5b0hp3`.`employees`SQLAlchemy is inspecting the schema using SHOW statements when you run metadata_obj.reflect(engine) and changing the underlying schema of its objects in accordance with what it sees.

Using revision databases and database introspection makes accessing different schemas easy in SQLAlchemy.

How to Extend this in Practice

In the example provided, we only printed out the columns of the employees table. In a real-world scenario, you would need to adapt your application logic to handle different schema versions. This is admittedly a pain. Cluttering your code with if or case statements may not be ideal. There is a trade off between making your application history aware and complexity of the code. However, SQLAlchemy makes this about as painless as it can be by generating most of the code for you from the historical schema.

Conclusion

Using revision databases and database introspection makes accessing Dolt time travel features easy in SQLAlchemy. You still need to adapt your application logic to understand the historical schemas in the Dolt database. Further questions about how to use Dolt to back your Python applications? We're really responsive on our Discord. Hit us up there.