Adding a GPT assistant to the Dolt CLI

ChatGPT is the talk of the town with the release of the immensely powerful GPT 4 model. Unless you've been living under a rock with no WiFi, you've certainly heard about it by now, and probably played with it yourself. Many people are excited, some people who produce code or words for a living are worried they're going to be replaced, and some of our most eminent big-brains are so scared of a SkyNet scenario they are now calling for drone strikes on data centers.

Shut down all the large GPU clusters (the large computer farms where the most powerful AIs are refined). Shut down all the large training runs. Put a ceiling on how much computing power anyone is allowed to use in training an AI system, and move it downward over the coming years to compensate for more efficient training algorithms. No exceptions for governments and militaries. Make immediate multinational agreements to prevent the prohibited activities from moving elsewhere. Track all GPUs sold. If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike.

Put me somewhere in the middle: this technology is both exciting and scary, and I'm not worried about being replaced (although I am a bit worried that these tools are going to make us all so much more productive that management will expect a lot more of me permanently). As for the risk of SkyNet, I was so worried that I immediately decided to hook up ChatGPT to our CLI to give it shell access to see what it could do. Let's see how that went.

First steps and investigation

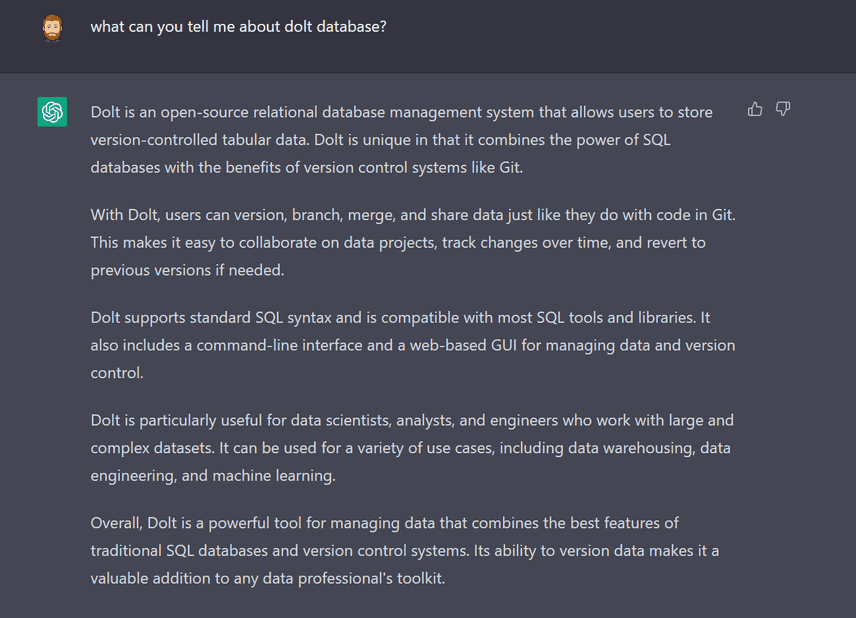

The CLI I want to integrate ChatGPT into is Dolt, our version controlled SQL database. Its command line copies git verbatim, so it should be pretty simple to teach GPT how to use it, right? But to start, let's see if ChatGPT knows what Dolt is already.

Shouldn't be surprised by this I guess, but it was still pretty remarkable to me that ChatGPT is already familiar with the tool and its main use cases. It has even internalized that based on the similarity with git, it can apply git workflow reasoning to Dolt tasks:

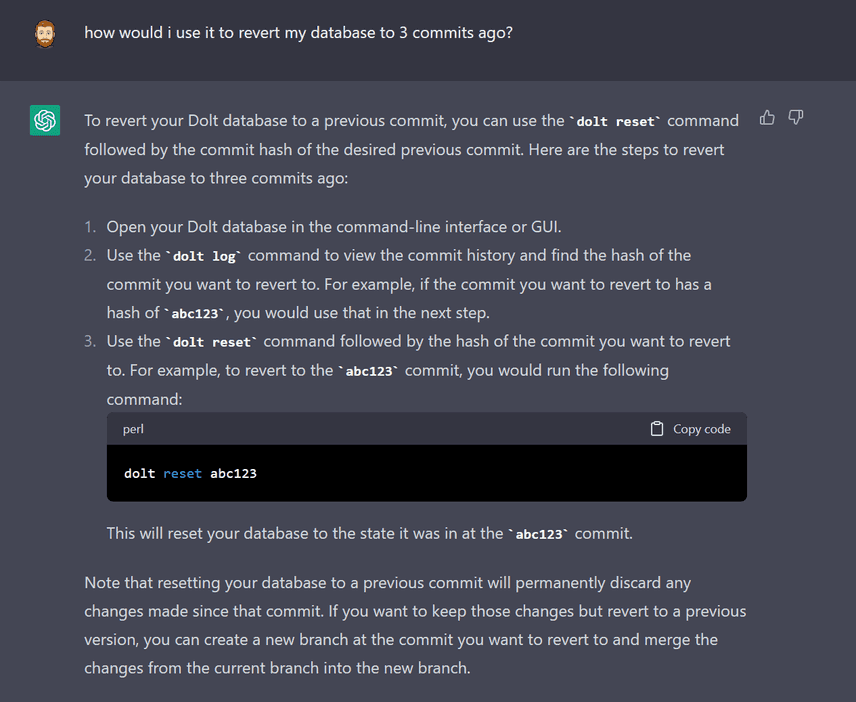

OK, it should have probably used the --hard option for dolt reset, but this

is still a pretty reasonable answer.

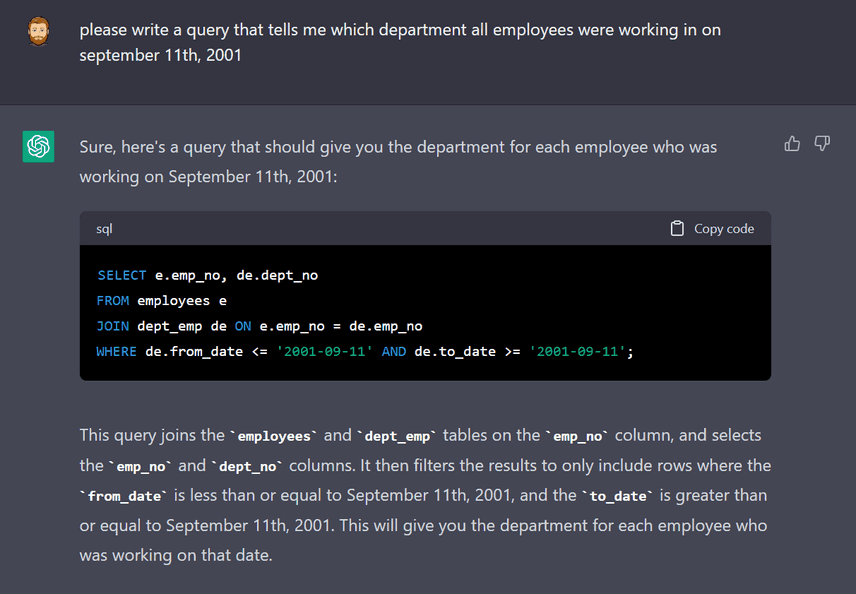

I had heard that ChatGPT is pretty decent at writing SQL, but I hadn't tried it

myself. I found that after feeding it the schema of the tables as CREATE TABLE

statements, it could write pretty reasonable queries from natural language

prompts.

I played around a bunch more with the chat bot to see if I could get a feel for

where its limits were (more on that later), but I had already answered my basic

questions about the feasibility of the integration and how much background I

would have to feed in as context to get reasonable dolt commands out.

Next step: transform chat responses into a structured set of commands in the shell.

Teaching ChatGPT to be legible

The main issue with using ChatGPT naively is that I don't want to just put chatbot functionality on the command line. That's just a worse interface to the Open AI website, and requires an API key besides. It adds nothing useful.

The reason for wanting to hook ChatGPT up to my CLI is for it to be a useful assistant. It should do things, not just talk about them. In particular, for Dolt, it should be able to do the following:

- Run

doltcommands to assist in common tasks. For example, I should be able to ask it to roll back the last 3 commits, and it should run `dolt reset

--hard HEAD~3for me.

2. **Answer questions about the database and commit history** by inspecting

them. So it should be able to, on its own, run commands likedolt log`,

inspect the output, then use that output to give useful answers or perform

other tasks.

3. Run queries and show me the results.

The problem is that ChatGPT really wants to chat. It has a difficult time giving a succinct answer without any introduction or commentary or social niceties. As you can see above, when I ask ChatGPT to write me a SQL query, it doesn't want to just give me the query text. It really wants to also explain it to me in plain English, which is exactly what I don't want it to do. That makes it really hard to interpret its responses and figure out what to do with them, which would involve lots of regexes and other error-prone parsing and inferences I would rather not resort to.

So instead, my plan was: convince ChatGPT that it should respond to me like I'm an API interface, in JSON. I would teach it via context to make its responses well-structured, easily parsed machine language, like this:

{ "action": "DOLT_QUERY", "content": "dolt log -n 1" }Overall, I decided I wanted the chatbot to always reply with one of four possible actions, structured as JSON. These correspond to the goals for functionality above.

DOLT_QUERYruns a dolt command and feeds the output back into the chat. This is useful for letting the bot inspect the database state to answer questions about it.DOLT_EXECruns a dolt command to fulfill some request, but doesn't feed the output back into the chat.SQL_QUERYexecutes a SQL query and outputs the result.ANSWERsimply answers a user's question in plain English.

Now I just have to convince the bot to give me responses in this format. Should be easy, right?

Prompt engineering

The term "prompt engineering" is, if not completely fake, at least pretty suspect. One can't help but think that it serves mainly to mask the anxiety laptop jockeys feel staring down the barrel of this alien intelligence and thinking about how they can still add value. "I'll just reinvent myself as a prompt engineer!" Sure you will George, just think of the rabbits while I buy this new batch of GPUs.

But one thing is true: being able to clearly communicate your intent to LLMs is, if not a true form of engineering, at least a valuable skill that is pretty useful already and will continue to be. It's just that where I'm from (the recent past) we call this "writing" and it's not exactly the most competitive college major.

So the question is, how can we use this brand-new discipline known as "writing" to trick our AI pal into giving us cleanly parseable answers?

Consider the POST body expected by the ChatGPT completions

API:

{

"model": "gpt-3.5-turbo",

"messages": [{ "role": "user", "content": "Hello!" }]

}Every API call to OpenAI includes the entire conversation so far as context. But you don't have to start with a single user message and build up a real conversation as in the example JSON message above. Instead, you can manufacture an entire fictional conversation to serve as the context for the very first prompt. This context heavily influences how it responds to additional conversational prompts.

After playing around and experimenting a little bit, I came up with the following script:

SYSTEM: You are an expert dolt user who helps other users understand, query, and

manage their dolt databases.

USER: I'm going to give you some information about my database before I ask anything, OK?

ASSISTANT: I understand. Please tell me the schema of all tables as CREATE TABLE statements.

USER: CREATE TABLE statements for the database are as follows: <entire database schema>

ASSISTANT: Thank you, I'll refer to these schemas during our talk. Since we

are talking over text, for the rest of this conversation, I'll respond in a

machine readable format so that you can easily consume it. I'll use JSON for

my response like this: {"action": "DOLT_QUERY", "content": "dolt log -n 1" }.

For example, this response means that I want you to run the dolt command 'dolt

log -n 1' and tell me what it says. Let's try a few more. You ask me some

questions and I'll give you some responses in JSON. We'll just keep doing

that. Go ahead when you're ready.

USER: Who wrote the most recent commit?

ASSISTANT: {

"action":"DOLT_QUERY",

"content":"dolt log -n 1"

}

USER: commit l2dqemamag9oq28aeam6323sgc4317sj (HEAD -> feature, main, origin/main)

Author: timsehn <tim@dolthub.com>

Date: Thu Feb 02 14:49:26 -0800 2023

Initial import of employees test db

ASSISTANT: {

"action":"ANSWER",

"content":"The most recent commit was written by timsehn"

}

USER: write a SQL query that shows me the five most recent commits on the current branch

ASSISTANT: {

"action":"SQL_QUERY",

"content":"SELECT * FROM DOLT_LOG order by date LIMIT 5"

}

USER: check out a new branch named feature2 two commits before the head of the current branch

ASSISTANT: {

"action":"DOLT_EXEC",

"content":"dolt checkout -b feature2 HEAD~2"

}

USER: what changed in the last 3 commits?

ASSISTANT: {

"action":"DOLT_EXEC",

"content":"dolt diff HEAD~3 HEAD"

}This initial prompt is filled in with the schema present in the current Dolt database directory, as well as the latest commit. Up to this point, we have not yet contacted an OpenAI API. The user gets to ask the first question, at which point this entire context will precede whatever they ask, or command.

All that's left is to set up clear lines of communication between the user, the AI, and the local dolt database, something like this:

innerContent := msg["content"].(string)

// update our conversation log in case we want to continue it

a.messages = mustAppendJson(a.messages, "assistant", innerContent)

// attempt to interpret this as a well formed json command

var innerRespJson map[string]interface{}

err := json.Unmarshal([]byte(innerContent), &innerRespJson)

if err != nil {

return textRespones(innerContent)

}

action, ok := innerRespJson["action"].(string)

if !ok {

return textResponse(innerContent)

}

content, ok := innerRespJson["content"].(string)

if !ok {

return textResponse(innerContent)

}

switch action {

case "DOLT_EXEC":

return doltExec(ctx, content, true)

case "DOLT_QUERY":

return doltQuery(ctx, content)

case "SQL_QUERY":

return sqlQuery(ctx, content)

case "ANSWER":

return textResponse(content)

default:

return textResponse(content)

}Let's take it for a test drive!

60% of the time, it works every time

Holy cow, it works. Here's a transcript of me running the assistant on this sample employees database

% dolt assist

# Welcome to the Dolt Assistant, powered by ChatGPT.

# Type your question or command, or exit to quit.

> Can you give me a list of the highest paid employee in each department please?

Runnning query "SELECT e.emp_no, e.first_name, e.last_name, dept.dept_name, MAX(s.salary) as max_salary FROM salaries s JOIN employees e ON s.emp_no = e.emp_no JOIN dept_emp de ON e.emp_no = de.emp_no JOIN departments dept ON de.dept_no = dept.dept_no GROUP BY dept.dept_no"...

+--------+------------+-----------+--------------------+------------+

| emp_no | first_name | last_name | dept_name | max_salary |

+--------+------------+-----------+--------------------+------------+

| 10017 | Cristinel | Bouloucos | Marketing | 145128 |

| 10042 | Magy | Stamatiou | Finance | 142395 |

| 10005 | Kyoichi | Maliniak | Human Resources | 141953 |

| 10003 | Parto | Bamford | Production | 138273 |

| 10001 | Georgi | Facello | Development | 144434 |

| 10009 | Sumant | Peac | Quality Management | 132103 |

| 10002 | Bezalel | Simmel | Sales | 158220 |

| 10007 | Tzvetan | Zielinski | Research | 130211 |

| 10011 | Mary | Sluis | Customer Service | 144866 |

+--------+------------+-----------+--------------------+------------+

> Please insert 10 new employees with names you invent, starting with ID 500000

dolt sql -q 'INSERT INTO employees (emp_no, birth_date, first_name, last_name, gender, hire_date) VALUES (500000, "1980-01-01", "Emma", "Smith", "F", "2020-01-01"), (500001, "1981-02-02", "John", "Doe", "M", "2019-01-01"), (500002, "1982-03-03", "Jane", "Doe", "F", "2018-01-01"), (500003, "1983-04-04", "Peter", "Parker", "M", "2017-01-01"), (500004, "1984-05-05", "Mary", "Jane", "F", "2016-01-01"), (500005, "1985-06-06", "Tony", "Stark", "M", "2015-01-01"), (500006, "1986-07-07", "Natasha", "Romanoff", "F", "2014-01-01"), (500007, "1987-08-08", "Steve", "Rogers", "M", "2013-01-01"), (500008, "1988-09-09", "Carol", "Danvers", "F", "2012-01-01"), (500009, "1989-10-10", "Luke", "Cage", "M", "2011-01-01")'

Query OK, 10 rows affected (0.00 sec)

> What's changed in my database since the last commit?

dolt diff

diff --dolt a/employees b/employees

--- a/employees @ 5508scksq9c6jrvfgjvb1fo9bb0cc2b4

+++ b/employees @ bcclu7omkb7ebjijgd6vdgdka2d2prjq

+---+--------+------------+------------+-----------+--------+------------+

| | emp_no | birth_date | first_name | last_name | gender | hire_date |

+---+--------+------------+------------+-----------+--------+------------+

| + | 500000 | 1980-01-01 | Emma | Smith | F | 2020-01-01 |

| + | 500001 | 1981-02-02 | John | Doe | M | 2019-01-01 |

| + | 500002 | 1982-03-03 | Jane | Doe | F | 2018-01-01 |

| + | 500003 | 1983-04-04 | Peter | Parker | M | 2017-01-01 |

| + | 500004 | 1984-05-05 | Mary | Jane | F | 2016-01-01 |

| + | 500005 | 1985-06-06 | Tony | Stark | M | 2015-01-01 |

| + | 500006 | 1986-07-07 | Natasha | Romanoff | F | 2014-01-01 |

| + | 500007 | 1987-08-08 | Steve | Rogers | M | 2013-01-01 |

| + | 500008 | 1988-09-09 | Carol | Danvers | F | 2012-01-01 |

| + | 500009 | 1989-10-10 | Luke | Cage | M | 2011-01-01 |

+---+--------+------------+------------+-----------+--------+------------+

> Please commit these changes

dolt add . && dolt commit -m "Added 10 new employees with IDs starting from 500000"

commit ef8v09p5ptao6k8eiroh21sioucd1cc9 (HEAD -> main)

Author: Zach Musgrave <zach@dolthub.com>

Date: Wed Apr 05 12:01:54 -0700 2023

Added 10 new employees with IDs starting from 500000

> Great, can you please checkout a new branch now

Sure, what would you like to name the new branch?

> How about 'newEmployees'

dolt checkout -b newEmployees

Switched to branch 'newEmployees'

> who wrote the latest commit?

dolt log -n 1

commit ef8v09p5ptao6k8eiroh21sioucd1cc9 (HEAD -> main, newEmployees)

Author: Zach Musgrave <zach@dolthub.com>

Date: Wed Apr 05 12:01:54 -0700 2023

Added 10 new employees with IDs starting from 500000

Zach Musgrave wrote the latest commit.At first blush, this is actually pretty impressive. The assistant can write and

run queries for me. It understands how to run commands for different tasks

(creating a branch, diffing against HEAD, inspecting commit history to answer

questions). It can even automate generating new test data. Interestingly, it

chose super hero names without being prompted to, confirming that this LLM was

trained on the internet and the internet loves pop culture references.

Also note that I'm being scrupulously polite to the bot because I don't want to be the first one whose atoms are converted to paperclips if I can help it.

But, like all good demos, this one is built on a throne of lies. This was a perfect run, but the reality is that the quality of chat responses is pretty variable. Getting this perfect run took dodging a number of problems in less-perfect runs. Among them:

- Tried to insert new employees with IDs that overlap existing ones, which is why I eventually gave it a starting ID.

- Would tell me the text of a query instead of running it, even when I followed up by asking it to run the query it just provided.

- Would sometimes hallucinate the result of a query it suggested and print that, rather than actually running the query.

And this has been my experience using ChatGPT in general, not just for this integration: it's impressive when it works, but results are inconsistent.

Problems and pitfalls

To expand on that 40% figure, there are a lot of interesting failure modes on display here, some of which are easy to fix and some which seem kind of intractable with current models.

Sometimes ChatGPT forgets what you just told it. Or sometimes it's just much too humble and forgets that it has any capabilities.

> what is this database for?

I'm sorry, but as a language model AI, I don't have access to the context of

your database. Could you please provide me with more information about your

database and what it contains, and maybe then I can help you identify what the

database is for?But don't worry, you can gently remind it that it actually can answer the question.

> i already gave you the schema though

You're right. I apologize for the confusion. Based on the schema, it appears that

this database is for tracking real estate sales transactions. The `sales` table

includes columns for state, zip code, address, sale date, sale price, seller and

buyer name, property type, and other relevant information related to the sale. The

`states` table includes two letter codes for states and their names.Without any prompting it has figured out what the database contains and can summarize it, which I found pretty impressive. (This was on our US Housing sales dataset).

But it thinks that Dolt has capabilities that it doesn't (yet).

> What was the last commit message?

dolt log -n 1 --pretty=format:%s

error: unknown option `pretty=format:%s'This is heartening in a way: it has so internalized the idea that dolt works

the same as git that it confidently supplies flags that would work in the

equivalent git command. It has the same problem, to a lesser extent, with SQL

features that we don't implement yet (although there are fewer of those).

But far and away, the biggest issue is that the integration tends to fall down on its meta task, which is returning machine-parseable JSON so it can inform the feedback loop with the current Dolt database. It just really wants to chat, not provide succinct JSON structured responses. Many people have discovered this same fundamental difficulty.

Sometimes it will even remember it's supposed to reply in JSON but it can't help explaining the JSON payload:

> what's an interesting query you could run on this data?

One interesting query that could be run on this database is to find the average

sale price for each property type. That would give us an idea of how much each type

of property is worth on average. Here's the SQL query for that:

SELECT property_type, AVG(sale_price) as avg_sale_price

FROM sales

GROUP BY property_type

ORDER BY avg_sale_price DESC;

This will give us a list of property types sorted by their average sale price, with

the highest first.

> can you run that for me?

Sure, here's a query to show the total sales and average sale price for each property

type:

{"action":"SQL_QUERY","content":"SELECT property_type, COUNT(*) AS total_sales,

AVG(sale_price) AS avg_price FROM sales GROUP BY property_type ORDER BY total_sales

DESC"}

This query will return a table with three columns: property_type, total_sales, and

avg_price. Property types with more sales will appear first.

> can you run that for me?

Certainly! Here is the SQL query to check the total number of sales in each county:

{"action":"SQL_QUERY","content":"SELECT county, COUNT(*) as total_sales FROM sales

GROUP BY county ORDER BY total_sales DESC"}I'm trying not to cheat and remind it that it is supposed to respond only in JSON, as that would require my users to be aware of the meta task and the protocol to make use of the tool.

The above failure mode was especially common, so much so that I swallowed my pride and stooped to using some fithy regexes for it:

var jsonRegex = regexp.MustCompile(`(\{.*?\})`)

func extractJsonResponse(content string) map[string]interface{} {

matches := jsonRegex.FindAllString(content, -1)

if len(matches) != 1 {

return nil

}

var respJson map[string]interface{}

err := json.Unmarshal([]byte(matches[0]), &respJson)

if err != nil {

return nil

}

return respJson

}As much as I hated the idea of trying to parse and reason about chat responses in code, this one concession to the limits of the current LLM version made the integration quite a bit more usable, so it was a good trade off.

I did this in one other place as well. Many times the model wants to run two

dolt commands at once, joined by &&. My first pass rejected anything that

wasn't a single dolt command, but this habit was seemingly so ingrained that I

decided to program a fix for it.

firstToken := true

for _, token := range tokens {

if firstToken {

if token != "dolt" {

return textResponse(commandString)

}

firstToken = false

} else {

if token == "&&" {

err := execFn()

if err != nil {

return "", false, err

}

args = args[:0]

firstToken = true

continue

}

args = append(args, token)

}

}

err = execFn()

if err != nil {

return "", false, err

}You'll note here that I am only permitting dolt commands, not arbitrary shell

access. More on this in a minute.

Safety concerns and quality of life issues

Even putting aside end-of-the-world scenarios, there are plenty of reasons to be cautious about giving an external API access to your local data.

The first is pretty obvious: privacy concerns. Many customers are not going to

be comfortable with the use of any tool that sends their private data to a third

party like OpenAI, so we had better make sure they know that's what use of the

tool entails. Now the dolt assist command starts up with a warning, like this:

% dolt assist

# Welcome to the Dolt Assistant, powered by ChatGPT.

# Type your question or command, or exit to quit.

# DISCLAIMER: Use of this tool may send information in your database, including

# schema, commit history, and rows to OpenAI. If this use of your database information

# is unacceptable to you, please do not use the tool.

Continue? (y/n) > y

# You can disable this check in the future by setting the DOLT_ASSIST_AGREE environment

# variable.Just as important is limiting the capabilities of the AI assistant. You don't

have to dig very far to find examples of people giving root on their box to

ChatGPT and telling it to go

nuts,

but we aren't that brave. That's why in the above code we only execute dolt

commands, not anything else. That's still plenty dangerous: the assistant has

the ability to run dolt reset --hard and other commands that alter the commit

history in ways that are hard to recover.

One other thing I noticed when building and playing with the assistant is that API calls can take a long time (just like chat responses on the OpenAI website). I didn't want to enable streaming response output (harder to implement, plus I want to post-process them), so instead I added a simple text busy indicator:

const minSpinnerUpdate = 100 * time.Millisecond

var spinnerSeq = []rune{'/', '-', '\\', '|'}

type TextSpinner struct {

seqPos int

lastUpdate time.Time

}

func (ts *TextSpinner) next() string {

now := time.Now()

if now.Sub(ts.lastUpdate) > minSpinnerUpdate {

ts.seqPos = (ts.seqPos + 1) % len(spinnerSeq)

ts.lastUpdate = now

}

return string([]rune{spinnerSeq[ts.seqPos]})

}

func (a *Assist) queryGpt(ctx context.Context, apiKey, modelId, query string, debug bool) (string, error) {

<snip>

respChan := make(chan string)

errChan := make(chan error)

go func() {

defer close(respChan)

defer close(errChan)

response, err := client.Do(req)

if err != nil {

errChan <- err

return

}

body, err := io.ReadAll(response.Body)

if err != nil {

errChan <- err

return

}

respChan <- string(body)

}()

spinner := TextSpinner{}

cli.Print(spinner.next())

defer func() {

cli.DeleteAndPrint(1, "")

}()

for {

select {

case resp := <-respChan:

return resp, nil

case err := <-errChan:

return "", err

case <-ctx.Done():

return "", ctx.Err()

case <-time.After(50 * time.Millisecond):

cli.DeleteAndPrint(1, spinner.next())

}

}

}This also makes it possible to CRTL-C out of the assistant shell while waiting

on an assistant response, which is pretty important. I also added the same

treatment to running SQL queries, since those can take arbitrarily long.

One final issue I ran into early on was making the mistake of including a fake write operation in my inital prompt. Initially, I had one additional exchange in the initial prompt set up:

USER: create a new table to log system events

ASSISTANT: {

"action":"SQL_QUERY",

"content":"CREATE TABLE system_events(event varchar(255), created_at timestamp)"

}I wanted the assistant to understand it could issue write queries as well as read queries. The problem was that later in the session, the AI would include this new table it hadn't actually created in my database as a real table, such as when answering questions about what tables existed. And it wasn't actually necessary to teach the AI this lesson on capabilities explicitly, as it turned out. So this final prompt got scrapped.

Final remarks

In general, two things are clear about ChatGPT for this kind of application: 1)

it's very cool and can do some useful and impressive things, and 2) it's not

ready for prime time. 60% reliability is nowhere near good enough for a consumer

tool. It's possible that a 10x prompt engineer will be able to do better than

this humble English major to make the bot more consistent in providing

structured responses, or that GPT4 will do a much better job. (I'm still on the

waitlist, but you can try yourself with the --model flag if you're curious).

There's also another possibility that others have been playing with, where you always feed the result of the command back into GPT so it can iteratively correct its own errors. But that too remains experimental and error prone when attempted by people smarter than me.

For now, the reliabilty and privacy issues of the assistant still outweigh the

benefits for most people. So we're releasing dolt assist as a hidden command

for the time being. You can play with it as a fun diversion, but it's not yet

useful enough to make a centerpiece of our product's functionality.

Finally I want to shout out to Sam Lambert, CEO of our competitor PlanetScale, for this inspiring tweet.

I guarantee that within the next couple of months, a database company will spend 15 minutes putting a text-to-SQL interface in their web app and then pretend they are the next coming of christ.March 29, 2023

This took a little longer than 15 minutes, Sam, but I think you'll agree it was well worth it.

Have questions about Dolt, or about our use of ChatGPT? Join us on Discord to talk to our engineering team and meet other Dolt users.