Announcing Standby Replication

Almost a year ago, we announced the first version of Dolt sql-server replication. In that blog post, we described a replication feature of Dolt sql-server where new Dolt commits on a primary server are replicated to a Dolt remote and reads issued against a read replica pull the latest updates from the Dolt remote in order to serve the latest data. Many features have been added to Dolt replication since then, including options for asynchronous replication and the ability to automatically configure replication for newly created databases on the primary server. And generally, the feature has served our users well, especially with regards to scaling out read traffic against their Dolt databases.

But when our users wanted to use remote-based replication for high availability, it was less clear exactly how to achieve failover to a replica. The pulls from the remote are done on-demand when the branches are accessed, but when you fail over you want to pull all branch HEADs into matching local branches and reconfigure the standby to start replicating to the remote. Additionally, for some of our users, the requirement to create Dolt commits in order to achieve replication did not match the workflows they were ideally striving for, where Dolt commits were made less frequently than SQL transaction COMMITs and had some application-level significance.

For these reasons, we've just released a new type of replication in Dolt sql-server which we call standby replication. This form of replication is fully compatible with Dolt's existing remote-based replication and we recommend it for high availability use cases in particular.

Standby Replication

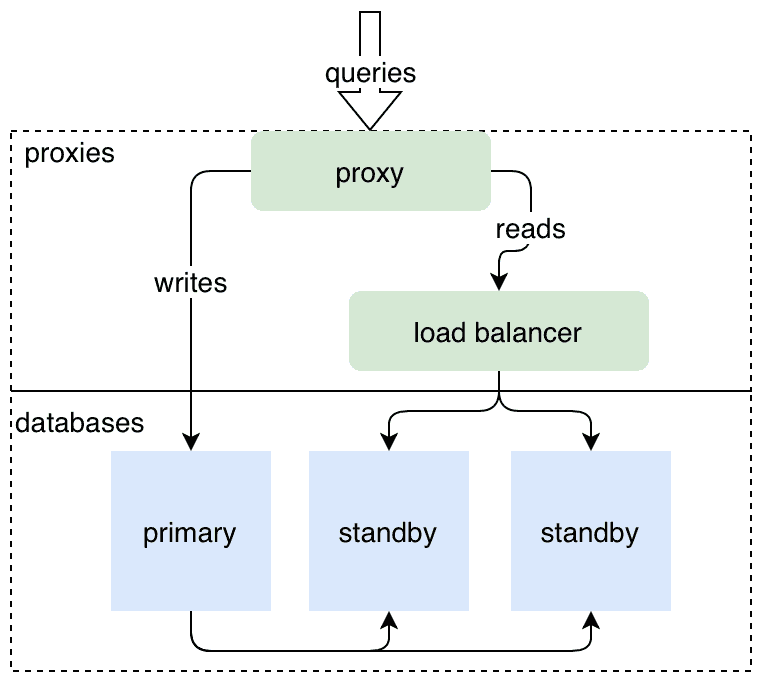

The configuration and behavior of standby replication takes a slightly different approach than that of remote-based replication. With standby replication, a set of sql-servers are configured as a cluster, where each server is configured to replicate to the others and each one can directly accept replication writes from its peers. In addition, each server in the cluster is assigned a role, either primary or standby. There is only one primary at a time and only a primary will accept and replicate writes to the other servers in the cluster. The other servers in the cluster run in the standby role. They will accept read requests and replication from the primary, but they will reject writes from the SQL interface.

Cluster topology will look something like this, if you are also using the replicas to serve read-only traffic:

Example

Let's jump right into an example and see how the new standby replication can be configured and used.

First, we will create two Dolt sql-server instances which will be in a cluster and configured to replicate to each other.

$ mkdir dolt_1

$ cd dolt_1

$ cat > config.yaml <<EOF

heredoc> listener:

heredoc> host: 127.0.0.1

heredoc> cluster:

heredoc> standby_remotes:

heredoc> - name: standby

heredoc> remote_url_template: http://localhost:50052/{database}

heredoc> bootstrap_role: primary

heredoc> bootstrap_epoch: 1

heredoc> remotesapi:

heredoc> port: 50051

heredoc> EOF

$ DOLT_ROOT_PATH=`pwd` dolt sql-server --config config.yaml --data-dir . >/dev/null 2>&1 &

$ dolt_1_pid=$!

$ cd ..

$ mkdir dolt_2

$ cd dolt_2

$ cat > config.yaml <<EOF

heredoc> listener:

heredoc> host: 127.0.0.1

heredoc> port: 3307

heredoc> cluster:

heredoc> standby_remotes:

heredoc> - name: standby

heredoc> remote_url_template: http://localhost:50051/{database}

heredoc> bootstrap_role: standby

heredoc> bootstrap_epoch: 1

heredoc> remotesapi:

heredoc> port: 50052

heredoc> EOF

$ DOLT_ROOT_PATH=`pwd` dolt sql-server --config config.yaml --data-dir . >/dev/null 2>&1 &

$ dolt_2_pid=$!

$ cd ..At this point, each server is running and we can query their roles:

$ echo 'select @@global.dolt_cluster_role' | mysql -u root -h 127.0.0.1 -t

+----------------------------+

| @@GLOBAL.dolt_cluster_role |

+----------------------------+

| primary |

+----------------------------+

$ echo 'select @@global.dolt_cluster_role' | mysql -u root -h 127.0.0.1 --port 3307 -t

+----------------------------+

| @@GLOBAL.dolt_cluster_role |

+----------------------------+

| standby |

+----------------------------+The server running on 3306 is the primary because its configuration had a bootstrap role of primary.

On the primary, we can create a database, create a table and insert some data into it.

$ cat <<EOF | mysql -u root -h 127.0.0.1 -t

CREATE DATABASE sequences;

USE sequences;

CREATE TABLE primes (i int primary key, val int);

INSERT INTO primes VALUES (1,2),(2,3),(3,5),(4,7),(5,11),(6,13),(7,17),(8,19),(9,23),(10,29);

EOFWe can see our new database and its contents on the standby:

$ echo 'select * from primes' | mysql -u root -h 127.0.0.1 --port 3307 sequences -t

+------+------+

| i | val |

+------+------+

| 1 | 2 |

| 2 | 3 |

| 3 | 5 |

| 4 | 7 |

| 5 | 11 |

| 6 | 13 |

| 7 | 17 |

| 8 | 19 |

| 9 | 23 |

| 10 | 29 |

+------+------+And we can query our replication status on the primary to ensure everything is going well:

$ echo 'select * from dolt_cluster_status' | mysql -u root -h 127.0.0.1 dolt_cluster -t

+-----------+----------------+---------+-------+------------------------+----------------------------+---------------+

| database | standby_remote | role | epoch | replication_lag_millis | last_update | current_error |

+-----------+----------------+---------+-------+------------------------+----------------------------+---------------+

| sequences | standby | primary | 1 | 0 | 2022-10-17 00:00:07.623518 | NULL |

+-----------+----------------+---------+-------+------------------------+----------------------------+---------------+If we attempt to perform a write against our database on the standby, we will receive an error:

$ echo 'insert into primes values (11,31)' | mysql -u root -h 127.0.0.1 --port 3307 sequences -t

ERROR 1105 (HY000) at line 1: Database sequences is read-only.Performing a Failover

We can perform a failover so that the server running on 3307 becomes the new primary and starts accepting writes and replicating them to its standby. We can change the role of a server using CALL dolt_assume_cluster_role(...). First we will make the primary into a standby and then make the old standby into the primary:

$ echo "call dolt_assume_cluster_role('standby', 2)" | mysql -u root -h 127.0.0.1 dolt_cluster -t

+--------+

| status |

+--------+

| 0 |

+--------+

$ echo "call dolt_assume_cluster_role('primary', 2)" | mysql -u root -h 127.0.0.1 --port 3307 dolt_cluster -t

+--------+

| status |

+--------+

| 0 |

+--------+Now the roles are reversed and the server running on port 3307 accepts writes and replicates them to the server running on port 3306:

$ echo 'select @@global.dolt_cluster_role' | mysql -u root -h 127.0.0.1 -t

+----------------------------+

| @@GLOBAL.dolt_cluster_role |

+----------------------------+

| standby |

+----------------------------+

$ echo 'select @@global.dolt_cluster_role' | mysql -u root -h 127.0.0.1 --port 3307 -t

+----------------------------+

| @@GLOBAL.dolt_cluster_role |

+----------------------------+

| primary |

+----------------------------+

$ echo 'insert into primes values (11,31)' | mysql -u root -h 127.0.0.1 --port 3307 sequences -t

$ echo 'select * from primes' | mysql -u root -h 127.0.0.1 sequences -t

+------+------+

| i | val |

+------+------+

| 1 | 2 |

| 2 | 3 |

| 3 | 5 |

| 4 | 7 |

| 5 | 11 |

| 6 | 13 |

| 7 | 17 |

| 8 | 19 |

| 9 | 23 |

| 10 | 29 |

| 11 | 31 |

+------+------+Monitoring Replication Failure

Lastly, we can see what it looks like when replication is failing and how that recovers once the peer comes back up. First, let's shut down the server running on port 3306 so that replication starts failing.

$ kill "$dolt_1_pid"Then let's insert some rows into the primes table and query the status table to see that replication is not working.

$ echo 'insert into primes values (12,37),(13,41)' | mysql -u root -h 127.0.0.1 --port 3307 sequences -t

$ sleep 20

$ echo 'select * from dolt_cluster_status' | mysql -u root -h 127.0.0.1 --port 3307 dolt_cluster -t

+-----------+----------------+---------+-------+------------------------+----------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| database | standby_remote | role | epoch | replication_lag_millis | last_update | current_error |

+-----------+----------------+---------+-------+------------------------+----------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| sequences | standby | primary | 2 | 23221 | 2022-10-17 00:00:56.651826 | failed to commit chunks on destDB: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial tcp 127.0.0.1:50051: connect: connection refused" |

+-----------+----------------+---------+-------+------------------------+----------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+There you can see that replication_lag_millis is growing and that current_error is non-NULL. Let's start dolt_1 again and see the errors go away and the latest writes replicate:

$ cd dolt_1

$ DOLT_ROOT_PATH=`pwd` dolt sql-server --config config.yaml --data-dir . >/dev/null 2>&1 &

$ dolt_1_pid=$!

$ cd ..

$ echo 'select * from dolt_cluster_status' | mysql -u root -h 127.0.0.1 --port 3307 dolt_cluster -t

+-----------+----------------+---------+-------+------------------------+----------------------------+---------------+

| database | standby_remote | role | epoch | replication_lag_millis | last_update | current_error |

+-----------+----------------+---------+-------+------------------------+----------------------------+---------------+

| sequences | standby | primary | 2 | 0 | 2022-10-18 20:47:06.192442 | NULL |

+-----------+----------------+---------+-------+------------------------+----------------------------+---------------+

$ echo 'select * from primes' | mysql -u root -h 127.0.0.1 sequences -t

+------+------+

| i | val |

+------+------+

| 1 | 2 |

| 2 | 3 |

| 3 | 5 |

| 4 | 7 |

| 5 | 11 |

| 6 | 13 |

| 7 | 17 |

| 8 | 19 |

| 9 | 23 |

| 10 | 29 |

| 11 | 31 |

| 12 | 37 |

| 13 | 41 |

+------+------+And let's cleanup the servers so they don't linger.

$ kill $dolt_1_pid $dolt_2_pidNext Steps and Conclusion

So that's a brief overview of standby replication and failover in the latest versions of Dolt. You should check out our reference documentation on standby replication for further details about how to configure and operate Dolt with standby replication.

For now, there are a few caveats to keep in mind while we continue to deliver functionality and features for standby replication:

-

Users and grants do not replicate over standby replication. Alterations to these should be applied on all servers.

-

Similarly,

DROP DATABASEdoes not currently replicate over standby replication. The standby replicas will continue to serve the database even after it is dropped on the primary. As a work around, theDROP DATABASEcommand can be applied on the standbys while they are in standby mode. -

We do not currently have documentation around how to lock down permissions on the

dolt_assume_cluster_rolestored procedure. If you need such functionality, please follow up on this issue. -

Lastly, but perhaps most importantly depending on your deployment scenario, is that transport layer security and authorization on the server used for the replication protocol itself is not currently implemented. Currently, the only way to deploy standby replication securely in any network environment where every agent is not fully trusted is to deploy it so that all traffic to and from the replication server (

cluster.remotesapi.portin theconfig.yaml) is mediated by an authenticating tunnel or a firewall, something like stunnel or envoy using mTLS and an internal PKI, for example. If you have requirements here, please follow up on this issue.

Besides those enhancements, we are currently working on example deployments and various control plane agents which help with running higher-availability Dolt clusters in environments like Kubernetes. Stay tuned for details!