Debugging Deadlocks in Dolt

Here at DoltHub we're building a database. Dolt is the only SQL database you can branch, merge, diff and clone. It's a MySQL compatible database with version control. As Dolt matures, we're continually improving its stability and reliability. Our goal is to be a drop-in replacement for MySQL in every aspect of performance and reliability. Today we're doing a deep-dive on a Dolt bug discovered in the wild.

The Setup

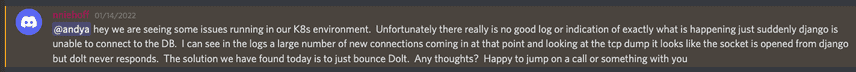

A few weeks ago we got a message from a customer who was experiencing an operational issue with Dolt:

After a short period of normal behavior, the database in question stopped responding. New connections were being

accepted, but queries were not returning results and seemingly weren't being executed. The active connection count

continued to increase, eventually crashing the host when a max connection limit was reached. Metrics from the host

indicated that the Dolt process was consuming typical amounts of memory and minimal CPU. These initial clues weren't

going to be enough to diagnose the issue; we needed more fine-grained information about the state of the process.

Thankfully, Golang has some excellent tooling around inspecting and measuring its runtime. Simply sending a

pkill -SIGABRT $PID to the offending process will cause it to shut down and log the state of all its goroutines.

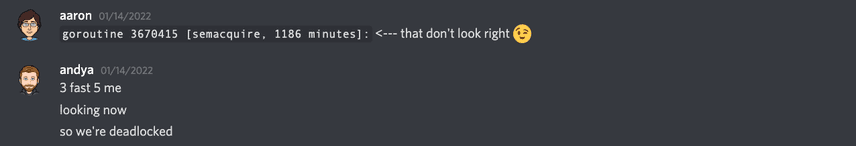

Our customer was kind enough to do this for us and send us the logs. Some fishy locking behavior immediately stood out:

Whodunit!?

Upon further inspection of the logs, we found many such instances of goroutines blocked trying to acquire the same lock. The stack trace for these goroutines looked like:

created by github.com/dolthub/vitess/go/mysql.(*Listener).Accept

/go/pkg/mod/github.com/dolthub/vitess@v0.0.0-20211112215933-1feb51c6c461/go/mysql/server.go:448 +0x1557

github.com/dolthub/vitess/go/mysql.(*Listener).handle(0xc0001995f0, {0x1ce3f08, 0xc002346098}, 0x2a35, {0xc0704ef28e01e191, 0x1197007c9c3f, 0x2a28f40})

/go/pkg/mod/github.com/dolthub/go-mysql-server@v0.11.1-0.20211118151213-d685afc5e3da/server/handler.go:83 +0x25

github.com/dolthub/go-mysql-server/server.(*Handler).ComInitDB(0xc00003b3c0, 0xc002738780, {0xc002738788, 0xc000f9c3a8})

/go/pkg/mod/github.com/dolthub/go-mysql-server@v0.11.1-0.20211118151213-d685afc5e3da/server/context.go:113 +0x45

github.com/dolthub/go-mysql-server/server.(*SessionManager).SetDB(0xc001052000, 0x432d10, {0xc002738788, 0x8})

/go/pkg/mod/github.com/dolthub/go-mysql-server@v0.11.1-0.20211118151213-d685afc5e3da/server/context.go:138 +0x7a

github.com/dolthub/go-mysql-server/server.(*SessionManager).getOrCreateSession(0xc001052000, {0x1cb78a8, 0xc000044020}, 0xc002deb5e0)

/usr/local/go/src/sync/mutex.go:81

sync.(*Mutex).Lock(...)

/usr/local/go/src/sync/mutex.go:138 +0x165

sync.(*Mutex).lockSlow(0xc001062a30)

/usr/local/go/src/runtime/sema.go:71 +0x25

sync.runtime_SemacquireMutex(0x18, 0x28, 0x7f9d19edb5b8)

goroutine 3670889 [semacquire, 1180 minutes]:New connections were established, but were immediately stopped in their tracks while trying to create a session. This was consistent with the steadily increasing connection count we observed and further confirmed our hypothesis that the database was deadlocked. So the question then became: how?

Red Herrings:

Auditing the locking code in the session manager revealed some suspicious patterns:

func (s *SessionManager) getOrCreateSession(ctx context.Context, conn *mysql.Conn) (sql.Session, error) {

s.mu.Lock()

defer s.mu.Unlock()

sess, ok := s.sessions[conn.ConnectionID]

if !ok {

s.mu.Unlock()

err := s.NewSession(ctx, conn)

s.mu.Lock()

if err != nil {

return nil, err

}

sess = s.sessions[conn.ConnectionID]

}

return sess.session, nil

}The first two lines of the function follow a common golang pattern for handling locks. For more details on the defer

statement, check out this example from the Golang team. The problem comes later in the

function, when we again touch the lock to call into another function that locks. If anything within NewSession()

panics, it will cause a second successive call to Mutex.Unlock() which will trigger an

unrecoverable panic.

We pushed a fix for this issue, but we didn't have much

confidence that we'd addressed the root cause. In particular, NewSession is called when the lock is not being held.

We needed to keep digging.

Cutting Closer

The best information we had were the stack dumps from the go runtime. A closer inspection of these logs showed that there was actually more than one lock that was being blocked on:

/go/pkg/mod/github.com/dolthub/vitess@v0.0.0-20211112215933-1feb51c6c461/go/mysql/server.go:266 +0x29

created by github.com/dolthub/vitess/go/mysql.(*Listener).Accept

/go/pkg/mod/github.com/dolthub/vitess@v0.0.0-20211112215933-1feb51c6c461/go/mysql/server.go:474 +0x19fa

github.com/dolthub/vitess/go/mysql.(*Listener).handle(0xc0001995f0, {0x1ce3f08, 0xc00000e008}, 0x29ec, {0xc0704e7b2616500d, 0x1127cd8bdebb, 0x2a28f40})

/go/pkg/mod/github.com/dolthub/go-mysql-server@v0.11.1-0.20211118151213-d685afc5e3da/server/handler.go:116 +0x90

github.com/dolthub/go-mysql-server/server.(*Handler).ConnectionClosed(0xc000410380, 0xc0025b0000)

/go/pkg/mod/github.com/dolthub/go-mysql-server@v0.11.1-0.20211118151213-d685afc5e3da/sql/analyzer/catalog.go:124 +0x70

github.com/dolthub/go-mysql-server/sql/analyzer.(*Catalog).UnlockTables(0xc00003b8c0, 0x29ec, 0x0)

/usr/local/go/src/sync/rwmutex.go:111 +0x36

sync.(*RWMutex).Lock(0x40d207)

/usr/local/go/src/sync/mutex.go:81

sync.(*Mutex).Lock(...)

/usr/local/go/src/sync/mutex.go:138 +0x165

sync.(*Mutex).lockSlow(0xc00003b8d8)

/usr/local/go/src/runtime/sema.go:71 +0x25

sync.runtime_SemacquireMutex(0x40ceb4, 0xa5, 0x90)

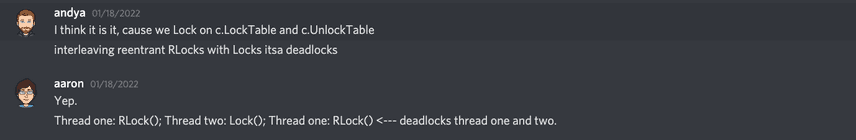

goroutine 3670415 [semacquire, 1186 minutes]:This was in fact the goroutine that has been blocking for the longest duration, suggesting it was at fault. This lock

belonged to the Catalog,

an abstraction within our SQL engine that keeps track of which Databases are available within a server. When we followed

the code paths for session creation, we found that NewSession() must acquire the Catalog lock while also holding

the SessionManager's lock in order to instantiate a session. The majority of the dumped goroutines were stuck at

SessionManager, but it now seemed clear the deadlock was coming from Catalog.

Git Blaming Yourself

A key difference between the SessionManager's lock and the Catalog's lock is the latter is a

"read-write lock".

Read-write locks allow multiple readers to access a resource at once, but only one writer. We use them throughout our

codebase to reduce lock contention on resources that need to be thread-safe. Their type definition in the golang stdlib

provides more detail:

// A RWMutex is a reader/writer mutual exclusion lock.

// The lock can be held by an arbitrary number of readers or a single writer.

// The zero value for a RWMutex is an unlocked mutex.

//

// A RWMutex must not be copied after first use.

//

// If a goroutine holds a RWMutex for reading and another goroutine might

// call Lock, no goroutine should expect to be able to acquire a read lock

// until the initial read lock is released. In particular, this prohibits

// recursive read locking. This is to ensure that the lock eventually becomes

// available; a blocked Lock call excludes new readers from acquiring the

// lock.

type RWMutex struct {

w Mutex // held if there are pending writers

writerSem uint32 // semaphore for writers to wait for completing readers

readerSem uint32 // semaphore for readers to wait for completing writers

readerCount int32 // number of pending readers

readerWait int32 // number of departing readers

}The operative sentence in this excerpt is In particular, this prohibits recursive read locking.. On the surface,

nested calls to RWMutex.RLock() will work as expected. However, in a concurrent setting this provides the potential

for a deadlock. This was precisely the cause of the

deadlock we were experiencing. Specifically, Catalog.Database() acquired a read-lock after being called within other

Catalog functions that were already holding a read lock on the same mutex. The process was deadlocked when a

write-lock was acquired on the Catalog between these nested read-locks.

The fix was pretty simple in the end. We were able to

just remove the locking calls from Catalog.Database() as its implementation was already thread-safe. Just had to

check one more thing

before we were done. Yep, it was me.

Wrapping Up

A few days after the issue was first reported, we cut a release that included the fix. We followed up with the customer shortly afterwards and things were running smoothly:

If this blog piqued your interest and you'd like to learn more, join us on discord. We're always looking to find new interesting applications for Dolt.