Faster than MySQL? An investigation

Introduction

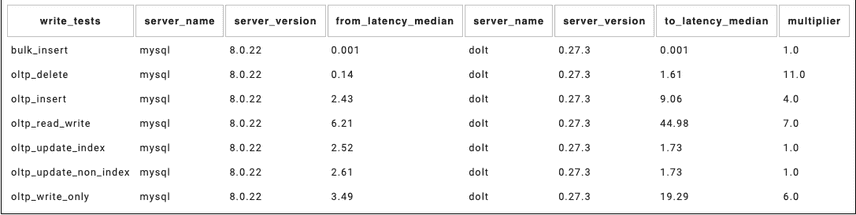

At DoltHub, we are obsessed with Dolt's performance. In the past we've written about Sysbench, our primary benchmarking mechanism for comparing our performance to MySQL's. Every time we run a release of Dolt we run a CI-job that executes the Sysbench test set on both Dolt and MySQL 8.0. Recently, we stumbled across a release that claimed to be substantially faster than MySQL in several tests. While we always welcome significant performance gains, we didn't think this one was real.

In this blog we will cover our investigation into this performance anomaly and what the problem ended up being.

The Discovery and The Challenge

Sysbench has two tests titled oltp_update_index and oltp_update_non_index which simulate around 10,000 updates on a

table with an index and without an index respectively. I've linked an example database later along with a table of the update

queries. On release 0.27.3 we noticed the results on these last two tests were substantially faster than mysql.

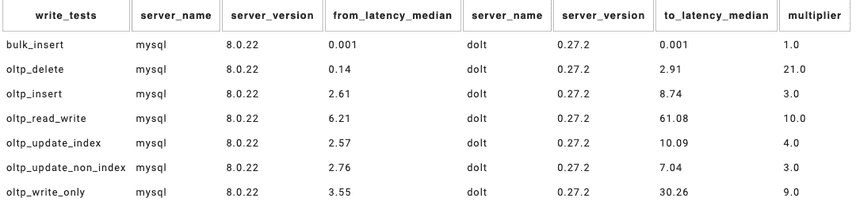

Below is our previous release performance where we were at least 4x slower on these test cases.

Naturally, the gauntlet was thrown down to see who would be the one to figure out this mysterious performance gain. I'd always been eager to delve into performance work, so I decided to take on the challenge.

The Investigation

I knew that the change in Dolt came between releases 0.27.2 and 0.27.3 but looking at our release notes, I wasn't suspicious of any commit in particular. So the first part of the investigation was coming up with a reproducible test case to determine whether a commit was good or bad.

I first approached this by trying to get a copy of the 10,000 insert and update queries that oltp_update_index test case

ran. The annoying part about this was that each test run used randomly generated inserts and updates. So after much head banging,

I was able to get a consistent set of data and update queries loaded into a dolt that0.27.2 and 0.27.3 the test case ran. You can find

that data here.

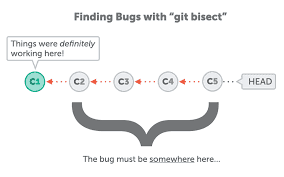

Using git bisect, I ran this test case on commits between release 0.27.2 and 0.27.3

until I found the commit where performance on this test case significantly improved. Unfortunately, all the commits seemed to perform similarly on the

test case. Very frustrating!

I looked back at our original performance results and realized that all of our tests were run back to back on the same

dolt server instance. What if I just made my test case running some portion of the sysbench test suite on one dolt instance My test case then

became running oltp_read_write and then oltp_update_index back to back. As a sanity check I ran this test case on our

latest dolt version and found that the performance anomaly came up.

Now I had my test case to do git bisect with. After a couple of iterations with git bisect I found the offending PR.

It looks like there was something wrong with how we handle auto increment values. I noticed that between the

test cases we were dropping and recreating the same table which used an auto_increment based primary key. So I tried the following test case:

mysql> create table mytable(pk int auto_increment primary key, val int);

Empty set (0.01 sec)

mysql> insert into mytable values (null, 1), (null, 2);

Query OK, 2 rows affected (0.01 sec)

mysql> select * from mytable;

+------+------+

| pk | val |

+------+------+

| 1 | 1 |

| 2 | 2 |

+------+------+

2 rows in set (0.00 sec)

mysql> drop table mytable;

Query OK, 0 rows affected (0.01 sec)

mysql> create table mytable(pk int auto_increment primary key, val int);

Empty set (0.00 sec)

mysql> insert into mytable values (null, 1), (null, 2);

Query OK, 2 rows affected (0.00 sec)

mysql> select * from mytable;

+------+------+

| pk | val |

+------+------+

| 3 | 1 |

| 4 | 2 |

+------+------+

2 rows in set (0.00 sec)We weren't resetting table level auto increment counter between table drops. That means all the update queries in oltp_update_index

and oltp_update_non_index were just no-ops! No wonder they were so fast.

The Fix

I originally wrote our implementation for auto increment tracking across transactions and branches, so I was happy that the bug wasn't mine. But I was in too deep to not go ahead and fix the bug.

As I was reading through the PR, it didn't make sense to me why this issue suddenly started arising. The PR moved our global tracker for auto increment values to a database level abstraction instead of an SQL-session level abstraction. So I decided to go all the way back to when this feature was shipped to see what was going on.

mysql> create table mytable(pk int auto_increment primary key, val int);

Empty set (0.01 sec)

mysql> insert into mytable values (null, 1), (null, 2);

Query OK, 2 rows affected (0.01 sec)

mysql> select * from mytable;

+------+------+

| pk | val |

+------+------+

| 1 | 1 |

| 2 | 2 |

+------+------+

2 rows in set (0.00 sec)

mysql> drop table mytable;

Query OK, 0 rows affected (0.01 sec)

mysql> create table mytable(pk int auto_increment primary key, val int);

Empty set (0.00 sec)

mysql> insert into mytable values (null, 1), (null, 2);

Query OK, 2 rows affected (0.00 sec)

mysql> select * from mytable;

+------+------+

| pk | val |

+------+------+

| 3 | 1 |

| 4 | 2 |

+------+------+

2 rows in set (0.00 sec)Looks like it was me who introduced the bug in the first place. Moving the state to the database level only exposed it some more.

Needless to say I added some small logic to make sure that we did some more accounting whenever a table was dropped.

Conclusion

At DoltHub, we understand that performance flaws get exposed very quickly in infrastructure. That's why we take any performance anomaly extremely seriously. Our goal is to build the most performant database we can. If you're interested in Dolt and using it please join our Discord group here