The Anatomy of Open Data Projects

A core motivation for building DoltHub was to empower organizations to collaboratively create and maintain high-quality data assets that they could collectively depend on. This is very much analogous to GitHub. Analogies are powerful ways to articulate an idea when an appropriate one is already widely understood. In our case a large community of developers, and technology-adjacent businesses more broadly, understand GitHub and how it enabled a revolution in open source software. It's therefore straightforward for us to explain our product in terms of an analogy.

The balance of this article is about the relatively nascent open data ecosystem, and its similarities to and differences from the open source software ecosystem.

Open Source Software

Collaborative public development is not necessary for software to be considered "open source"; that is a characteristic of the distribution license. But when one uses the term "open source", collaborative development is normally what is implicitly understood. Indeed, at various points in my career, assessing whether to adopt a tool often involved gauging how "vibrant" the "community" around that tool was. What does this mean specifically?

- gauging the level of mailing list activity, JIRA board back and forth, or GitHub issues to see whether there is healthy interest in the tool, that is, a user base

- checking Git commit logs to see if there is a healthy base of contributors
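
As a rough illustration of the second check, the sketch below summarizes text in the format produced by `git shortlog -sn HEAD` (one line per author: a commit count, a tab, then the name). The function name, the `top_share` measure and the sample data are assumptions made for this example, not an established metric or real project data.

```python
from collections import Counter


def contributor_summary(shortlog_text: str, top_n: int = 1) -> dict:
    """Summarize `git shortlog -sn` style text: '<count>\t<author>' per line."""
    commits = Counter()
    for line in shortlog_text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first run of whitespace: count first, author name after.
        count, author = line.split(None, 1)
        commits[author] += int(count)
    total = sum(commits.values())
    top = sum(n for _, n in commits.most_common(top_n))
    return {
        "contributors": len(commits),
        "commits": total,
        # Share of commits made by the top_n authors; a value near 1.0
        # suggests the project depends on very few people.
        "top_share": top / total if total else 0.0,
    }


# Hypothetical sample input, not taken from any real repository.
sample = "   120\tAlice\n    45\tBob\n    35\tCarol\n"
summary = contributor_summary(sample)
```

A high `top_share` is only a hint; it says nothing about how recent or how substantial the contributions are.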

What this kind of analysis seeks to establish is a qualitative sense of whether a healthy dynamic exists around the project. At a fundamental level, marginal users must see value in the project, and some fraction of them must be willing to convert to contributors in order to sustain it. If significant investment is attracted, then the user base can grow as the project increases in scope and quality, thus becoming a solution to more and more problems. This is the "flywheel" that sustains open source projects, and it allows companies to drop in pieces of software infrastructure that they might otherwise have to buy or maintain. The existence of the open source alternative lets these companies compare the cost of buying or building against the cost of having a stake in a free-to-use tool, usually one outside their core competency.

This is not a scientific, or exhaustive, theory of open source software project dynamics, but it seems a reasonable first approximation of the dynamic that drives companies to seek to employ open source contributors to projects that constitute a critical part of their infrastructure.

Open Data

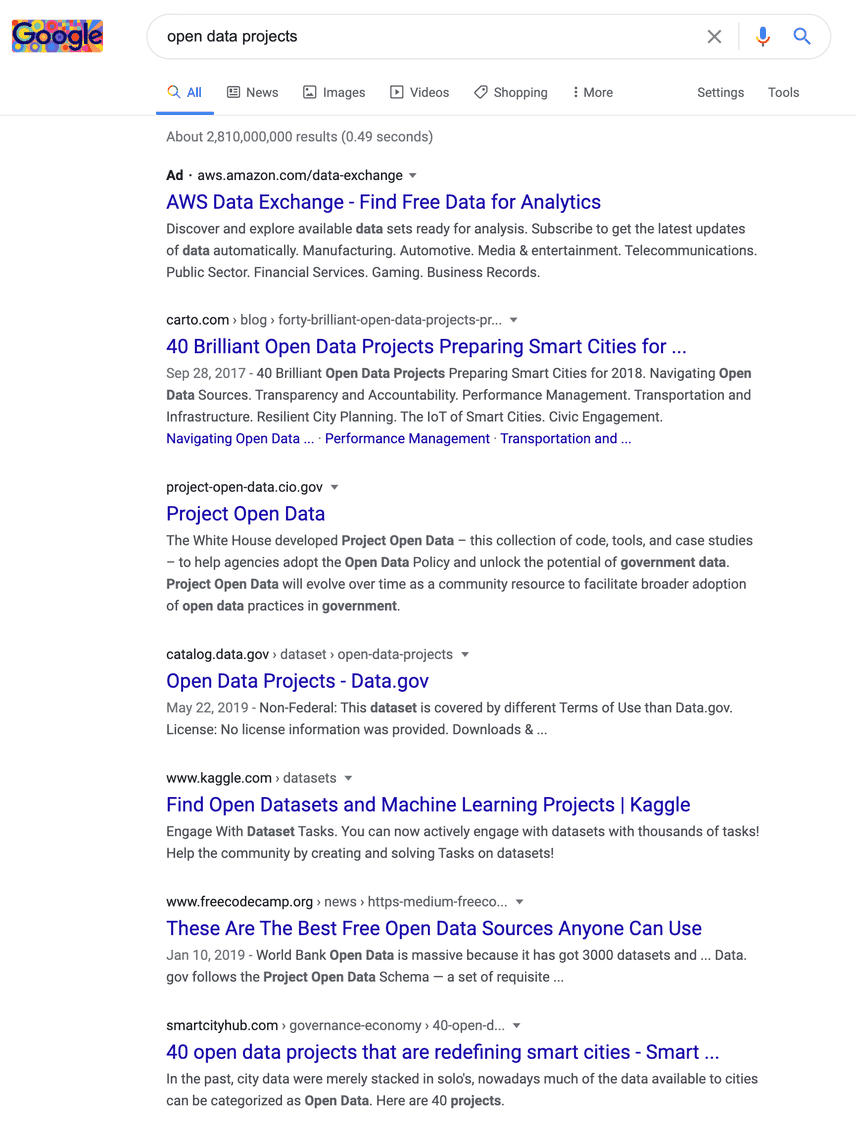

To start to gauge the relevance of this analogy at the level of "project dynamics", I performed a quick Google search:

The first non-paid result is a blog post by Carto, a SaaS business focused on "spatial data science". While the data sources it lists may be interesting, they are picked because they complement Carto's business thesis about the value of geospatial data. Furthermore, most of the identified "projects" are the work of a single individual or organization publishing data, rather than the kind of distributed collaboration we identified as the core of the open source software development model.

The next two links are initiatives by the government to make data publicly available. While government data can be great, the existence of a single publisher is the essence of its value. We then have Kaggle, a well-known platform for machine learning competitions. Since a necessary prerequisite for a machine learning competition is a dataset, Kaggle hosts a lot of free datasets. However, they tend to be tailored toward the competition, most are not updated, and there is no concept of collaboration on a single dataset. We then reach some summary articles, probably what we were really looking for, that identify interesting and compelling "open data." Again, however, these data sources are mostly organizations and governmental or quasi-governmental entities publishing.

It seems pretty clear that the term "open data" often just means "available data": a one-directional relationship where individuals and organizations consume a data source passively. But how are organizations supposed to collaborate when they share an interest in a dataset that is necessary but not a core competitive advantage?

Let's dig into an example.

Open Elections

One interesting open data project that looks a bit like open source software is Open Elections. We have blogged about the project in the past. To recap, the goal of the project is simple:

Our goal is to create the first free, comprehensive, standardized, linked set of election data for the United States, including federal and statewide offices.

Interest in voting data is broad, and is driven by both professional and personal motivations. Furthermore, many organizations have an interest in voting data, but lack the financial resources to buy it. Many commercial-grade solutions, such as the Associated Press, are tailored to large media organizations with big budgets and low latency tolerance. This is not a good trade-off for researchers or campaigns. Open Elections seeks to democratize access to this data by making high-quality precinct-level results available.

Perhaps because of wide interest in the data, there is a sizable base of contributors. It's not hard to imagine a researcher or enthusiast seeing the Open Elections homepage and realizing that with a small outlay of effort they can extend the dataset to meet their needs. They convert from a user to a contributor. This looks much more like open source software than random quasi-governmental agencies pushing out collections of CSVs whenever they see fit. To be clear, publishing of that kind is not to be scoffed at, but it's an entirely different model of data distribution.

Open Elections is managed via GitHub, which makes sense as that's the most notable platform around for administering a project with this dynamic. It's also free to use, widely understood, and the product is mature and well executed. However, one shortcoming of distributing data in a format such as CSV is that the format itself is "loose"; it is, after all, just a file format. Databases are a file format in a sense, but differ hugely in that they require a program to edit the format. In essence that program, the query engine, "gates" interactions with the format, which in turn enables much stronger "guarantees" to be presented. These guarantees are described by a schema, and enable the use of a query language. Dolt uses SQL. Datasets stored in a database are much more work to build than collections of files of comma-separated values, but when data arrives in a database the time to value is radically compressed. We published a subset of the Open Elections data as a Dolt repository, which does a nice job of illustrating the power of the distribution model.
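
To make the contrast between a "loose" file and a "gated" database concrete, here is a minimal sketch. It uses SQLite from Python's standard library as a stand-in for a schema-enforcing SQL database (it is not Dolt), and the table, columns and rows are hypothetical, not taken from Open Elections.

```python
import csv
import io
import sqlite3

# Hypothetical precinct-level rows. The second row conflicts with the first
# (same precinct and candidate), and the third has no vote count.
raw_csv = (
    "precinct,candidate,votes\n"
    "P-001,Smith,120\n"
    "P-001,Smith,125\n"
    "P-002,Jones,\n"
)

# The CSV reader accepts every row: the file format enforces nothing.
rows = list(csv.DictReader(io.StringIO(raw_csv)))

conn = sqlite3.connect(":memory:")
# The schema states the guarantees: one row per (precinct, candidate),
# and a vote count is always present.
conn.execute(
    """
    CREATE TABLE results (
        precinct  TEXT NOT NULL,
        candidate TEXT NOT NULL,
        votes     INTEGER NOT NULL,
        PRIMARY KEY (precinct, candidate)
    )
    """
)

accepted, rejected = [], []
for row in rows:
    # Treat an empty CSV field as a missing value.
    votes = int(row["votes"]) if row["votes"] else None
    try:
        conn.execute(
            "INSERT INTO results (precinct, candidate, votes) VALUES (?, ?, ?)",
            (row["precinct"], row["candidate"], votes),
        )
        accepted.append(row)
    except sqlite3.IntegrityError as err:
        # The query engine refuses rows that violate the schema.
        rejected.append((row, str(err)))

stored = conn.execute("SELECT COUNT(*) FROM results").fetchone()[0]
```

All three rows parse as CSV, but only the first is stored. The duplicate and the row with a missing count are turned away at write time, so a consumer of the table never has to discover them.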

This is the core value proposition of Dolt to the open data community: it offers a mechanism for building high-quality, production-grade resources, and for delivering a uniquely seamless consumption experience to users of the data.

Moving Forward

Putting data in relational databases is hard. The analogy in open source software would be unit tests. While the existence of such a "forcing function" for quality makes the life of the contributor harder by raising the barrier to a successful contribution, it also greatly elevates the experience of the marginal user.

That forcing function, using a database with Git-like features for managing open data projects, is essential to our vision for Dolt and DoltHub in the open data world. We see Dolt the database, and the collaboration features built on top of it for DoltHub, as having the potential to elevate the quality of the data assets that come out of open data projects such that businesses are more willing to rely on them, and therefore to invest in the projects themselves.

Conclusion

To recap, we have used the analogy of open source code, and Git and GitHub, to articulate the goals of Dolt and DoltHub in many places, including this blog. While the analogy is a powerful communication tool, it does tend to gloss over the key differences between the dynamics at play in collaborative process design (open source software) and collaborative fact assembly (open data).

One reason we believe open data projects have not taken off is a lack of tools that make it easy for contributors to publish data assets of high enough quality that commercial enterprises see them as viable alternatives to proprietary and paid solutions. Dolt and DoltHub represent our attempt to facilitate collaboration that creates high-quality data assets that can become reliable dependencies.