The European Union (EU) leads the world in technology regulation. The regulation is well-meaning, usually intended to protect people from harm or from being misled. Having the EU set standardized regulation is generally better than having each of the 27 member nations set its own. And its influence is ubiquitous. Why does every website ask you if it can use cookies? You have the EU’s General Data Protection Regulation (GDPR), Article 7 to thank for that.

Now, the EU is back with Artificial Intelligence (AI) regulation. The EU AI Act was passed in May 2024 and is set to be enforced starting August 2026. Will this regulation help? This remains to be seen. But if you’re building artificial intelligence in Europe, you must start thinking about compliance.

Dolt, the world’s first and only version-controlled SQL database, can help. This article explains.

EU AI Act#

The EU AI Act is a set of regulations meant to govern the development and deployment of artificial intelligence systems in Europe. The Act defines which AI systems it applies to and sets rules for the systems in scope.

Brief History#

The EU AI Act was passed in May 2024. It’s a hefty, complicated law. It contains 180 Recitals (preambles explaining intent), 113 Articles (the legally binding provisions) and 13 Annexes (additional technical specifications). Here is the full text. The act comes into effect soon: August 2026.

Article 1 provides a good summary of the scope and intent:

1. The purpose of this Regulation is to improve the functioning of the internal market and promote the uptake of human-centric and trustworthy artificial intelligence (AI), while ensuring a high level of protection of health, safety, fundamental rights enshrined in the Charter, including democracy, the rule of law and environmental protection, against the harmful effects of AI systems in the Union and supporting innovation.

2. This Regulation lays down:

(a) harmonised rules for the placing on the market, the putting into service, and the use of AI systems in the Union;

(b) prohibitions of certain AI practices;

(c) specific requirements for high-risk AI systems and obligations for operators of such systems;

(d) harmonised transparency rules for certain AI systems;

(e) harmonised rules for the placing on the market of general-purpose AI models;

(f) rules on market monitoring, market surveillance, governance and enforcement;

(g) measures to support innovation, with a particular focus on SMEs, including start-ups.

In the News…#

Here at DoltHub, we were somewhat aware of the law. But recently, news of potential delayed enforcement from August 2026 until January 2027 brought it to some of our European users’ attention. We had to dig deeper.

Systems in Scope#

Not all AI systems are in scope for the EU AI Act regulation. The law applies to “High-Risk AI Systems”, which the act defines in Annex III. A high-risk AI system is an AI deployment in biometrics, critical infrastructure, education, employment, government benefits, credit, health insurance, law enforcement, migration, and justice.

Moreover, Article 51 defines any sufficiently large general-purpose model as carrying systemic risk. Thus, general models like ChatGPT or Claude, called general-purpose AIs in the law, are subject to this regulation as well. However, the rules for these models differ from the rules for high-risk AI systems.

Regulation Summary#

A summary of the law is provided by the Future of Life Institute, a nonprofit that worked with the EU to develop the AI Act.

At an even higher level, high-risk AI systems are subject to additional rules covering the data used to train the AI models and the way the output of the AI models is used. Some of the rules are basic hygiene requirements like documentation and logging. Others are more onerous, requiring audit trails and human oversight.

General-purpose AIs have simpler requirements. They must provide technical documentation, comply with copyright law, and publish a summary of the content used for training.

Data Version Control and Compliance#

Here at DoltHub, we built Dolt, the world’s first version-controlled SQL database. A version-controlled database helps with compliance for two articles in particular:

- Article 10: Data and Data Governance

- Article 14: Human Oversight

What is Dolt#

Dolt is a SQL database that you can fork, clone, branch, merge, push and pull just like a Git repository. Git versions files. Dolt versions tables. Dolt is like Git and MySQL had a baby. Dolt produces a database-enforced audit log of every cell in your database, a dream feature for your compliance team.

One of Dolt’s traditional use cases is versioning AI training data. One prospective use case is to use Dolt to produce diffs of AI-generated content for human review. This makes Dolt ideal to help with compliance for the new EU AI Act’s data governance and human oversight requirements.

Data and Data Governance#

Training and testing data for high-risk AI systems are subject to additional governance requirements. I read the law such that at the very least, you must be able to produce the data that you used to train or test a model. Once the data is produced, you must assert it is free of bias and error. You must be able to identify the data’s origin and provide an audit log of “data-preparation processing operations” like labeling and cleaning.

Dolt can manage your training and test data, providing instant, queryable access to the data used to train any of your models. Whenever you train or test a model, you note which commit or tag (i.e., a named commit) was used. This is an immutable record of the data used. You can query this data just like the current copy of your database. Each commit must have an author and can include a description of what changed, further enhancing auditability. If you would like to see what changed between two versions of the data, you can use Dolt’s diff functionality, which operates at the cell level, so you get fine-grained data diffs across versions.
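A minimal Python sketch of that snapshot-and-query routine follows. It only builds the SQL statements; running them is left to whatever MySQL-compatible driver you already use. The function names, the (SQL, parameters) tuple shape, and the `%s` placeholder style are assumptions for illustration, not part of Dolt. The Dolt pieces it relies on are the `DOLT_COMMIT` and `DOLT_TAG` procedures, the `AS OF` clause, and the `DOLT_DIFF` table function.

```python
import re
from typing import List, Tuple

Statement = Tuple[str, tuple]  # (SQL text, driver parameters)

# Hypothetical rule: only allow names that are harmless when inlined into SQL.
_SAFE_NAME = re.compile(r"^[A-Za-z0-9_][A-Za-z0-9._/-]*$")


def safe_name(name: str) -> str:
    """Reject table or tag names containing quotes, spaces, or backticks."""
    if not _SAFE_NAME.match(name):
        raise ValueError(f"unsafe name: {name!r}")
    return name


def snapshot_statements(tag: str, message: str, author: str) -> List[Statement]:
    """Commit pending data changes, then tag the commit for a training run."""
    return [
        # -A stages every changed table; --author takes "Name <email>".
        ("CALL DOLT_COMMIT('-A', '-m', %s, '--author', %s)", (message, author)),
        # The tag is the named commit a model version can cite later.
        ("CALL DOLT_TAG(%s, 'HEAD')", (safe_name(tag),)),
    ]


def rows_at(table: str, tag: str) -> Statement:
    """Read a table exactly as it was at the tagged commit."""
    return (f"SELECT * FROM `{safe_name(table)}` AS OF '{safe_name(tag)}'", ())


def diff_between(table: str, old_tag: str, new_tag: str) -> Statement:
    """Cell-level changes to one table between two snapshots."""
    return ("SELECT * FROM DOLT_DIFF(%s, %s, %s)", (old_tag, new_tag, table))
```

With a DB-API cursor, each statement would be run as `cursor.execute(sql, params)`. The tag name is validated and inlined in `rows_at` rather than bound as a parameter, because placeholder support inside `AS OF` may vary by driver.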

Human Oversight#

High-risk AI systems are required to be built such that “they can be effectively overseen by natural persons during the period in which they are in use”. In particular, high-risk AI systems must provide the ability “to decide, in any particular situation, not to use the high-risk AI system or to otherwise disregard, override or reverse the output of the high-risk AI system”. A human must be able to review AI output before it is accepted. A human must be able to roll back changes made by an AI system.

Dolt can be integrated into your AI-powered application to provide human review in a “Pull Request”-style workflow. As described in our Cursor for Everything article, if you migrate your application to use a Dolt backend, AI can insert content on a branch. Your application can present the diff to the user for review. If the AI response is acceptable, you merge it. Otherwise, you simply delete the branch. This setup lets you guarantee human review of AI output.
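The branch, review, and merge-or-delete steps can be sketched as three small Python helpers that build the SQL for each stage. The helper names, the (SQL, parameters) tuple shape, and the assumption that production data lives on a branch called `main` are illustrative choices, not something the workflow requires. The procedures used (`DOLT_CHECKOUT`, `DOLT_COMMIT`, `DOLT_MERGE`, `DOLT_BRANCH`) and the `DOLT_DIFF` table function are Dolt's own.

```python
from typing import List, Tuple

Statement = Tuple[str, tuple]  # (SQL text, driver parameters)


def propose(branch: str, ai_writes: List[Statement], summary: str) -> List[Statement]:
    """Put AI-generated writes on their own branch, leaving main untouched.

    Assumes the session starts on main (a hypothetical production branch).
    """
    return [
        ("CALL DOLT_CHECKOUT('-b', %s)", (branch,)),      # branch off and switch to it
        *ai_writes,                                        # the AI's INSERT/UPDATE statements
        ("CALL DOLT_COMMIT('-A', '-m', %s)", (summary,)),  # commit the proposal
        ("CALL DOLT_CHECKOUT('main')", ()),                # return to production data
    ]


def review(branch: str, table: str) -> Statement:
    """Diff of one table between main and the proposal, for the human reviewer."""
    return ("SELECT * FROM DOLT_DIFF('main', %s, %s)", (branch, table))


def decide(branch: str, approved: bool) -> List[Statement]:
    """Merge an approved proposal into the current branch; delete a rejected one."""
    if approved:
        return [("CALL DOLT_MERGE(%s)", (branch,))]
    return [("CALL DOLT_BRANCH('-D', %s)", (branch,))]
```

Because nothing reaches `main` until `decide` is called with `approved=True`, the application can make the human decision the only path to production.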

Rolling back any change is also straightforward using Dolt’s Git-like functionality. You can roll back an individual change using revert. You can move the whole database back in time using a reset. Or you can create a new branch at an older commit using checkout, switch your application to use that branch, and test. You have the full power of Git’s rollback capabilities on your database.
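As a rough illustration, the three rollback options map onto Dolt SQL procedures as follows. The `rollback` helper, its mode names, and the default branch name are hypothetical. For the third option this sketch creates the branch with `DOLT_BRANCH` and then switches to it with `DOLT_CHECKOUT`, a two-step variant of creating the branch through checkout.

```python
from typing import List, Tuple

Statement = Tuple[str, tuple]  # (SQL text, driver parameters)


def rollback(mode: str, commit: str, branch: str = "rollback-test") -> List[Statement]:
    """Build the statements for one of three rollback styles."""
    if mode == "revert":
        # Adds a new commit that undoes one earlier commit; history is kept.
        return [("CALL DOLT_REVERT(%s)", (commit,))]
    if mode == "reset":
        # Moves the current branch back to `commit` and resets the working data.
        return [("CALL DOLT_RESET('--hard', %s)", (commit,))]
    if mode == "branch":
        # Leaves the current branch alone; the old state is examined on a new branch.
        return [
            ("CALL DOLT_BRANCH(%s, %s)", (branch, commit)),
            ("CALL DOLT_CHECKOUT(%s)", (branch,)),
        ]
    raise ValueError(f"unknown rollback mode: {mode!r}")
```

Revert is usually the gentlest choice for a shared branch, since it keeps every earlier commit visible in the log.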

Hypothetical Case Study: Flock Safety#

Based in Atlanta, Georgia and primarily servicing the US market, Flock Safety builds a full-service, end-to-end safety solution for neighborhoods, properties, schools, and businesses. Flock works with its clients to install network-connected, infrastructure-free cameras and audio detection devices on premises. Flock uses AI to decode data captured from these devices into actionable evidence. Using these machine learning models, Flock can alert when a known suspect vehicle drives past a camera or enable investigators to search for suspect vehicle history. This information is used by law enforcement to identify, apprehend, and prosecute the suspect.

In this hypothetical, Flock Safety would like to expand into the European market. Flock’s solution is a high-risk AI system subject to the EU AI Act because their solution deals with law enforcement.

Flock Safety uses Dolt to version and manage all of their machine learning data. The exact details of Flock’s Dolt setup are in this linked article.

Let’s summarize a few key details here. All of Flock’s visual and audio training data is stored in Dolt. Labeled data is inserted into Dolt and Dolt commits are created. Whenever a model is trained, the data used to train the model is given a Git-style tag (i.e., a named commit) so that a permanent snapshot of the data can be referenced via that tag. Thus, Flock has a queryable audit log of all training data changes, with annotations at the exact points models were trained.

Dolt would make compliance with the EU AI Act’s data and data governance regulations simple. Here is a hypothetical interaction between Flock’s compliance team and Flock’s engineering team.

- What training data was used to build this model?

When we train a model, we record a Dolt tag of the data used to train it. Every model version maps to a specific data snapshot. The tag is model-2026-01-28 for the model trained on January 28, 2026. What SQL queries would you like me to run on the data to produce finer-grained details?

- The EU AI Act is particularly interested in biased data. Can you prove no biased data entered the model?

Flock training data consists of images of automobile license plates. An additional column, has_person, has been added to the training data indicating whether a person is in the image. We do not train models on images with humans present. To confirm the training data is free of such images, we run the SQL query select count(*) from training_images as of 'model-2026-01-28' where has_person=1, which returns 0. Dolt supports as of syntax for easy querying of data at past points in history, including tags.

- A week later, Dolt tests detect a record with has_person equal to true. When did this record enter our training set?

A join of the dolt_diff_training_images and dolt_log system tables identified the erroneous commit, including the exact timestamp and author. The commit was reverted, removing the biased data before any model was trained on it. The commit author was contacted to investigate the root cause further.
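The two checks in this exchange can be sketched in Python as below. The `training_images` table and `has_person` column come from the scenario above; the function names, the tag validation rule, and the assumption that the cursor returns plain tuples are illustrative. The root-cause query assumes the standard layout of Dolt's `dolt_diff_<table>` system table, where each user column appears with `from_` and `to_` prefixes alongside `to_commit`, and of `dolt_log` (`commit_hash`, `committer`, `email`, `date`, `message`).

```python
import re
from typing import Tuple

Statement = Tuple[str, tuple]  # (SQL text, driver parameters)

# Hypothetical rule: tags must be safe to inline after AS OF.
_TAG = re.compile(r"^[A-Za-z0-9_][A-Za-z0-9._/-]*$")


def bias_check(tag: str) -> Statement:
    """Count images with a person in the snapshot a model was trained on."""
    if not _TAG.match(tag):
        raise ValueError(f"unsafe tag: {tag!r}")
    return (
        f"SELECT COUNT(*) FROM training_images AS OF '{tag}' WHERE has_person = 1",
        (),
    )


def root_cause() -> Statement:
    """Find the commits where a row first appeared with, or flipped to, has_person = 1."""
    return (
        "SELECT l.commit_hash, l.committer, l.email, l.date, l.message "
        "FROM dolt_diff_training_images AS d "
        "JOIN dolt_log AS l ON l.commit_hash = d.to_commit "
        "WHERE d.to_has_person = 1 "
        "AND (d.from_has_person IS NULL OR d.from_has_person = 0) "
        "ORDER BY l.date",
        (),
    )


def snapshot_is_clean(cursor, tag: str) -> bool:
    """Run the bias check on any DB-API cursor that returns tuple rows."""
    sql, params = bias_check(tag)
    cursor.execute(sql, params)
    (count,) = cursor.fetchone()
    return count == 0
```

A check like `snapshot_is_clean` could run as a gate before each training job, so a tag is only handed to the trainer once the count is zero.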

┌─────────────────────────────────────────────────────────────┐
│  Week 1: Model Training                                     │
│  Tag created: model-2026-01-28                              │
│  Training data: 50,000 images, all has_person=0             │
└───────────────────────────┬─────────────────────────────────┘
                            │
                            ↓ Model deployed
┌─────────────────────────────────────────────────────────────┐
│  Week 2: Quality Control Tests                              │
│  Dolt test detects:                                         │
│    image_51247 has has_person=1                             │
└───────────────────────────┬─────────────────────────────────┘
                            │
                            ↓ Compliance investigation
┌─────────────────────────────────────────────────────────────┐
│  Root Cause Analysis                                        │
│                                                             │
│  SELECT * FROM dolt_log                                     │
│  JOIN dolt_diff_training_images                             │
│  WHERE image_id='image_51247';                              │
│                                                             │
│  Result:                                                    │
│  - Added by: labeler-smith@flock.com                        │
│  - Date: 2026-02-03 (AFTER model training)                  │
│  - Commit: a3f9c2d "Batch import Slovenia data"             │
└───────────────────────────┬─────────────────────────────────┘
                            │
                            ↓ Remediation
┌─────────────────────────────────────────────────────────────┐
│  Action Taken                                               │
│                                                             │
│  CALL DOLT_REVERT('a3f9c2d');                               │
│  → Biased image removed before next training                │
│                                                             │
│  Labeler contacted for retraining                           │
│  Model-2026-01-28 confirmed clean                           │
└─────────────────────────────────────────────────────────────┘

As you can see, Dolt is a compliance team’s ideal system for data governance of AI training data.

Hypothetical Case Study: Nautobot#

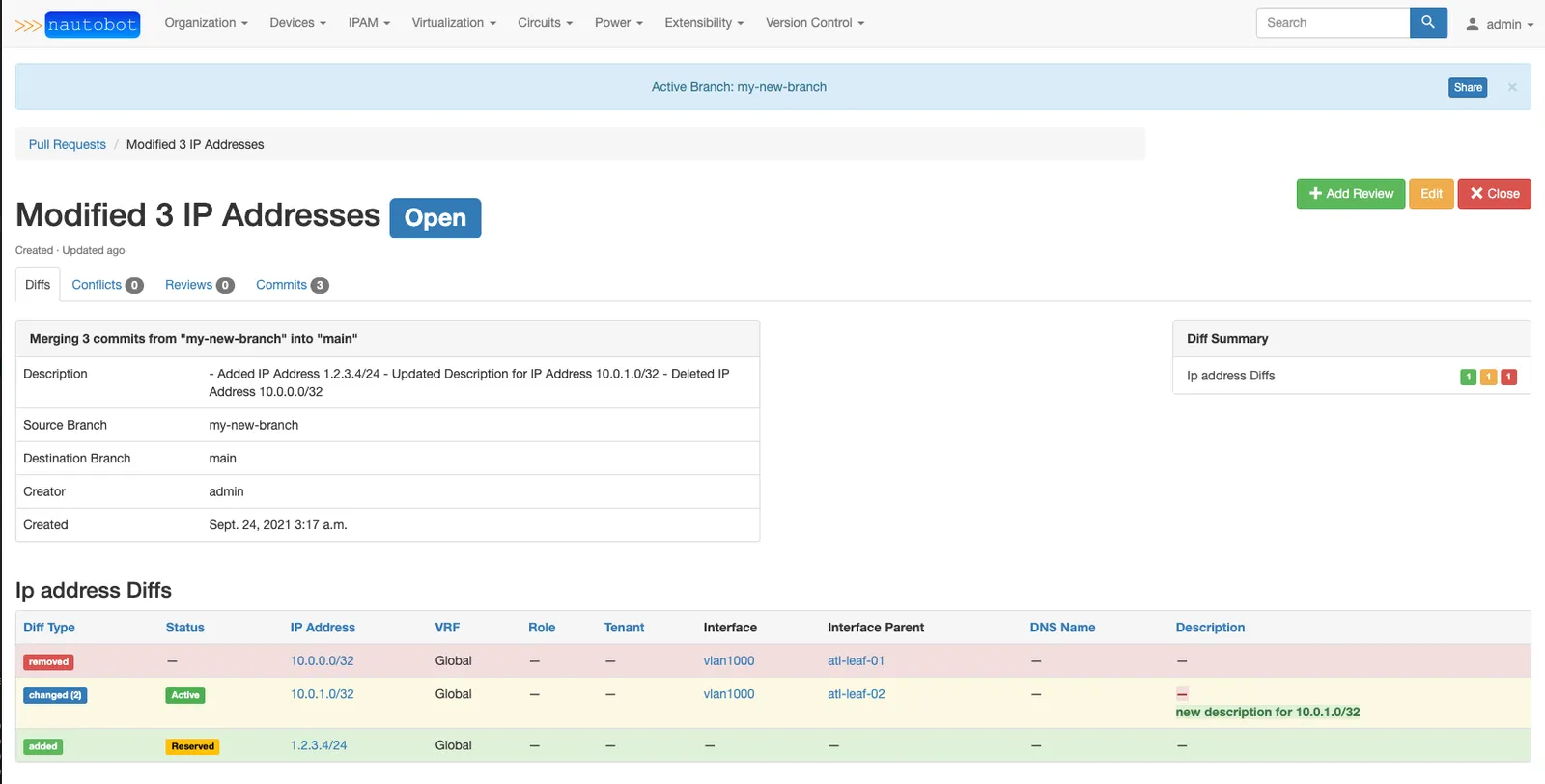

Network To Code are the creators of a network configuration management tool called Nautobot. Nautobot has long used Dolt to support a Pull Request-style review workflow.

In this hypothetical, a large European Internet Service Provider (ISP) has built an AI-powered network manager. Because ISPs are critical infrastructure, the new network manager is subject to the EU AI Act. Thus, any changes this AI-powered network manager suggests must be human reviewed.

The large ISP finds Nautobot. Its Pull Request workflow, powered by Dolt, is perfect. The agentic network manager can suggest changes on branches and a human operator can review and approve the changes. Nautobot and Dolt allow the ISP to comply with the EU AI Act.

┌─────────────────────────────────────────────────────────────┐
│  AI-Powered Network Manager                                 │
│  (watches network, detects problems)                        │
└───────────────────────────┬─────────────────────────────────┘
                            │
                            ↓ Proposes fix
┌─────────────────────────────────────────────────────────────┐
│  Nautobot (web application)                                 │
│  - Shows network topology                                   │
│  - Manages device configs                                   │
│  - Provides UI for engineers                                │
└───────────────────────────┬─────────────────────────────────┘
                            │
                            ↓ Stores data in
┌─────────────────────────────────────────────────────────────┐
│  Dolt                                                       │
│                                                             │
│  main branch (PRODUCTION)                                   │
│  ├─ Router-47 config                                        │
│  ├─ Switch-203 config                                       │
│  └─ Firewall-12 config                                      │
│                                                             │
│  ai-proposal-branch (UNDER REVIEW)                          │
│  └─ Router-47 config MODIFIED ← AI change                   │
└───────────────────────────┬─────────────────────────────────┘
                            │
                            ↓ Human engineer reviews
┌─────────────────────────────────────────────────────────────┐
│  Engineer Decision                                          │
│                                                             │
│  Approve → MERGE  → Goes to production                      │
│  Reject  → DELETE → Nothing changes                         │
│  Modify  → EDIT   → Then merge                              │
└─────────────────────────────────────────────────────────────┘

The human’s review interface is built directly into the Nautobot web application.

Conclusion#

Dolt enables you to comply with the data governance and human oversight requirements of the EU AI Act. Questions? Come by our Discord and we will be happy to answer.