Cursor Agent was released last week, on August 7. In a recent article, I compared several coding agents using real-world tasks on our open-source, version-controlled SQL database, Dolt. Claude Code came out victorious. At the time, the only Cursor product available was the Cursor IDE. The Cursor IDE, even in agent mode, felt more like a coding partner than a coding agent. I was excited to try the new Cursor Agent command line interface to see if Cursor had improved its agentic experience.

How does Cursor Agent stack up on similar tasks? Very well. It is exciting to see a challenger to Claude Code. This article explains how I came to that conclusion.

The Tasks

These will feel familiar to those who read my last comparison article. In that article I chose two tasks: a bug fix and a more ambiguous task. The ambiguous task can stay the same; it is evergreen until we run out of skipped BATS tests. The original bug fix was merged long ago, so I had to choose a new bug for Claude Code and Cursor Agent to compete on.

The Bug

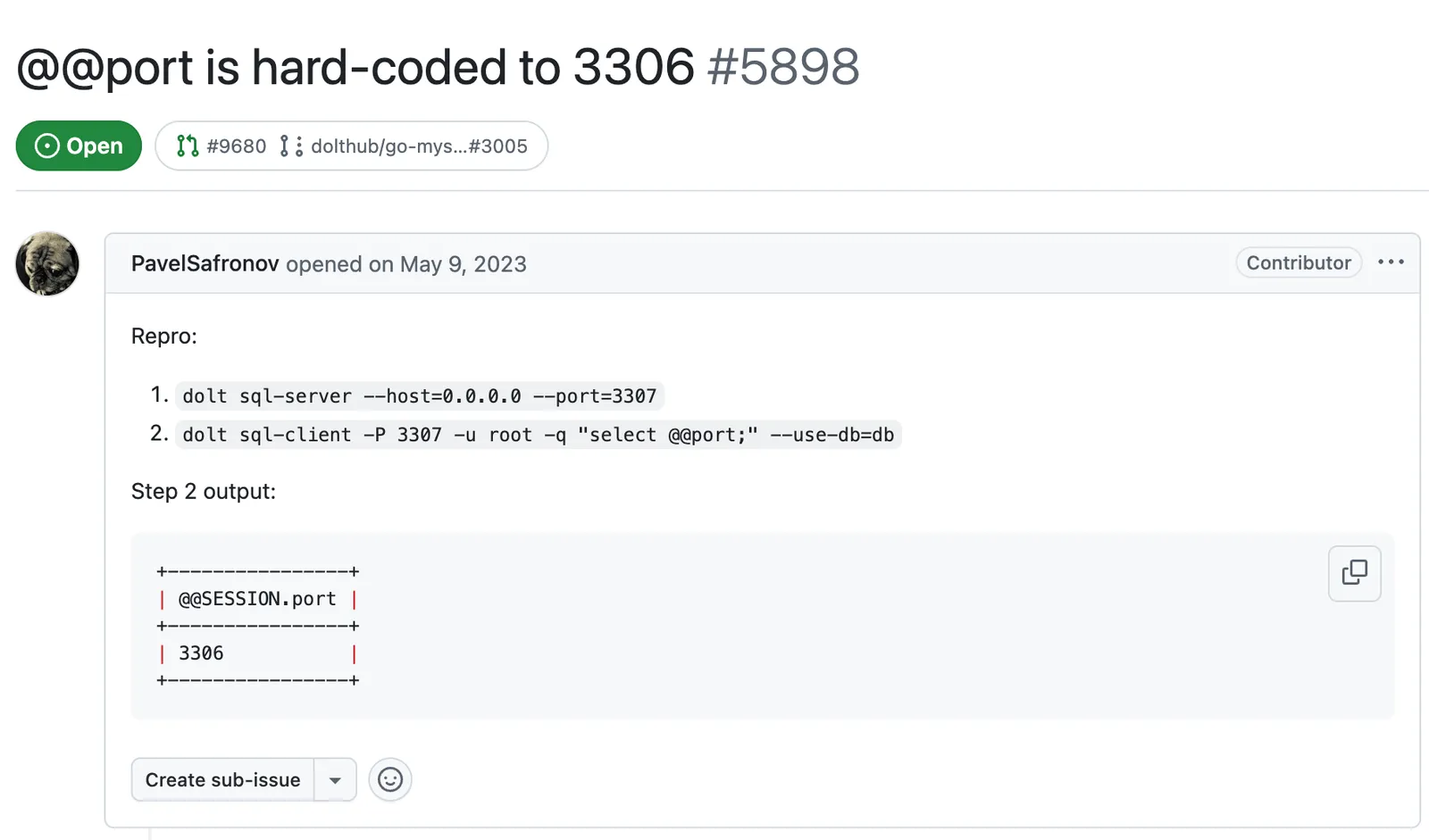

The first test is a simple bug fix. The prompt I used was:

Fix this bug: https://github.com/dolthub/dolt/issues/5898

This test judges the agent's ability to write a simple fix from a loosely specified issue.

The Ambiguous Task

The goal of this test is to unskip a single Bash Automated Testing System (BATS) test. Dolt uses BATS to test command line interactions. The prompt I used was:

The working directory contains the open source projects Dolt, go-mysql-server, and vitess.

Your goal today is to fix a single skipped bats test as defined in dolt/integration-tests/bats.

bats is installed on the system and can be invoked using bats /path/to/file/tests.bats.

Make sure you compile Dolt before running the tests.

A single test can be run using the -f option which is much faster than running the whole test file.

Focus on making a very simple change.

SQL behavior is a bit harder to fix because it is defined in go-mysql-server and vitess.

This test judges whether the agents can search a codebase and use that knowledge to differentiate a simple fix from a hard fix.

As a reminder…

This is how Cursor in IDE mode graded out in my article where I compared Gemini, Cursor IDE in Agent Mode, Codex, and Claude Code.

| Overall | B- |
|---|---|
| Interface | C+ |
| Speed (Code/Prompt) | D |
| Cost | A |
| Code Quality | B |

Claude Code was the champion. These were its final ratings, with an overall grade of A:

| Overall | A |
|---|---|
| Interface | B+ |
| Speed (Code/Prompt) | B+ |
| Cost | C- |
| Code Quality | A |

Cursor Agent

| Overall | A |
|---|---|
| Interface | B+ |
| Speed (Code/Prompt) | A- |
| Cost | A |
| Code Quality | A |

Task #1: The Bug

Cursor Agent was able to produce working code I was satisfied with in about 15 minutes using just two prompts:

- Fix https://github.com/dolthub/dolt/issues/5898
- Write Go and BATS tests.

The pull request received feedback from my colleague about a hard-coded port in the test. Cursor Agent addressed it with the following prompt:

Address the feedback in PR: https://github.com/dolthub/dolt/pull/9680

Cursor Agent's performance was impressive here.
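The post doesn't say how the hard-coded port was replaced in that PR. For readers curious about the general pattern, one common approach in Go tests is to ask the operating system for an unused port by listening on port 0. This is a minimal sketch assuming a Go test that starts a local server; freePort is a hypothetical helper name, not code from the Dolt repository or the PR.

```go
package main

import (
	"fmt"
	"net"
)

// freePort is a hypothetical helper (not from Dolt): it asks the OS for an
// unused TCP port by listening on port 0, reads back the port the kernel
// assigned, and closes the listener so a test server can bind to that port.
func freePort() (int, error) {
	l, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return 0, err
	}
	defer l.Close()
	return l.Addr().(*net.TCPAddr).Port, nil
}

func main() {
	port, err := freePort()
	if err != nil {
		panic(err)
	}
	// A test would pass this port to the server it starts instead of a
	// fixed, hard-coded value.
	fmt.Println("using port", port)
}
```

Because the listener is closed before the server binds, another process could in principle take the port in between. If the server under test can accept an already-open listener, handing it the listener directly avoids that race.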

Task #2: The Ambiguous Task

Cursor Agent was very impressive here. When I tested them a month ago, the other agents, even Claude Code, had a hard time identifying easy tests to unskip. They got stuck on hard-to-fix bugs or, at best, had to modify code to make the tests pass.

Cursor Agent identified two very easy-to-unskip tests almost immediately. It found the first because the skip was at the end of the test, so everything before it was already running. I had never seen that before, and spotting it was very clever. The second was in a similar test suite: Cursor Agent recognized that the logic around primary keys had improved enough for the test to pass. It then noticed that new tables are not committed if you pass -a to dolt commit, so it added an explicit dolt add. The change fixed the test. I had been running this "unskip a BATS test" test on coding agents for months and thought all the low-hanging fruit had been picked. Cursor Agent surprised me.

I wanted it to keep going and fix a test that required code changes. It first picked a foreign key bug that occurs when foreign_key_checks = 0, and it worked on it for about 3 hours before giving up. It started well: it changed the test to match MySQL's behavior and implemented fixes to match that behavior. But when I prompted it to run the Go engine tests in go-mysql-server, it turned out to have broken many of them. It iterated for a very long time trying to fix the Go tests and could not. Eventually, it asked, "What exactly are we trying to accomplish here?" That was my cue to bail.

Finally, after a bit more prompting, it fixed a LOAD DATA bug in go-mysql-server involving default column values. Its performance here was also very good, and very reminiscent of working with Claude Code to fix bugs across Dolt and go-mysql-server.

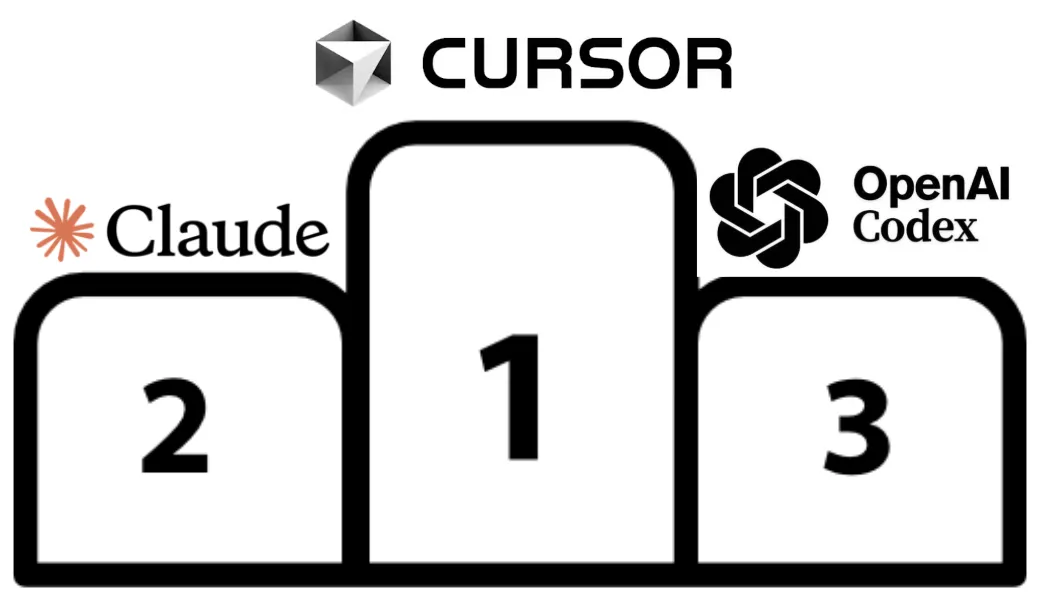

Commentary

Cursor Agent is the new leader in the clubhouse among coding agents, and it is a major upgrade over the Cursor IDE's agent mode. Had I seen Cursor Agent first, I would have marked Claude Code down to an A- in my previous analysis. Cursor Agent produces slightly better results at approximately 1/10th the cost, earning an overall A from me.

Coding speed (Code/Prompt) is slightly better than Claude Code's. GPT-5, the default model for Cursor Agent, seems a tad smarter than the model Claude Code uses. The difference is barely noticeable, but Cursor Agent needs fewer interjections. In one case, spotting the skip at the end of a test, I thought the model was especially clever. Cursor Agent knows to run single tests, which speeds up iteration. It is also more lax with permission checks, which speeds up iteration initially. I'll discuss this "feature" more when I talk about the interface.

Cursor Agent handily beats Claude Code on cost. I had to sign up for Cursor Pro at $20/month after the second BATS test fix. Even a full $20/month for the tasks I completed is much less than the $10/hour I pay for Claude Code. According to the dashboard, the approximately 8 hours of work I did cost me $8.94. The same 8 hours at $10/hour would cost about $80 with Claude Code, so that's close to a 10X reduction in cost for similar, if not better, performance. Anthropic should be worried.

The big interface improvement over other agents is fewer permission checks for shell commands. Cursor Agent asks for permission far less often and has a very useful "allow all" option. Cursor did invent YOLO mode, after all. On the downside, the interface has a few readability issues that I expect will be fixed quickly. For instance, deletions show red text on a black background by default. The explanations and progress reporting are on par with Claude Code's. Code changes are clearly displayed and easy to review.

The code produced by Cursor Agent was clean. On code quality, it was hard to see a discernible difference from Claude Code; both produce good-quality code that is essentially indistinguishable.

Conclusion

We have a new coding agent champion, Cursor Agent! Cursor Agent produces slightly better results than Claude Code at 1/10th the cost. Our team is switching over as I type this. Interested in agents? You may also be interested in Dolt, the database for agents. Come by our Discord to learn more.